The Agents Behind HackerOne Agentic PTaaS: Proven, Scalable, and Benchmarked

Pentesting has always depended on expert intuition and careful iteration: mapping an attack surface, forming hypotheses, validating exploitation paths, and turning proof into actionable fixes.

But as modern applications grow more complex and the attack surface expands faster than teams can keep up, scaling that workflow becomes the bottleneck.

Today, we launched HackerOne Agentic PTaaS to deliver continuous, expert-verified pentesting at enterprise scale. Agentic PTaaS provides continuous security validation through autonomous agents augmented by elite human expertise, producing high-confidence findings grounded in real, exploitable risk.

Our agents form an autonomous pentesting system-as-a-service designed to behave less like a “scanner that runs faster” and more like a skilled security researcher, systematically exploring an application, testing what matters, and producing verifiable findings teams can act on.

In fact, the HackerOne PTaaS agent delivered approximately 88% accurate, fix-verified findings with low false positives against our benchmark suite. Compared to a leading frontier model approach using Claude Sonnet 4.5, our agent doubled the performance and reduced noise to zero.

Agentic PTaaS enables better and more frequent coverage, helping teams address risks more effectively.

The Agent Framework That Keeps Testing Adaptive and Auditable

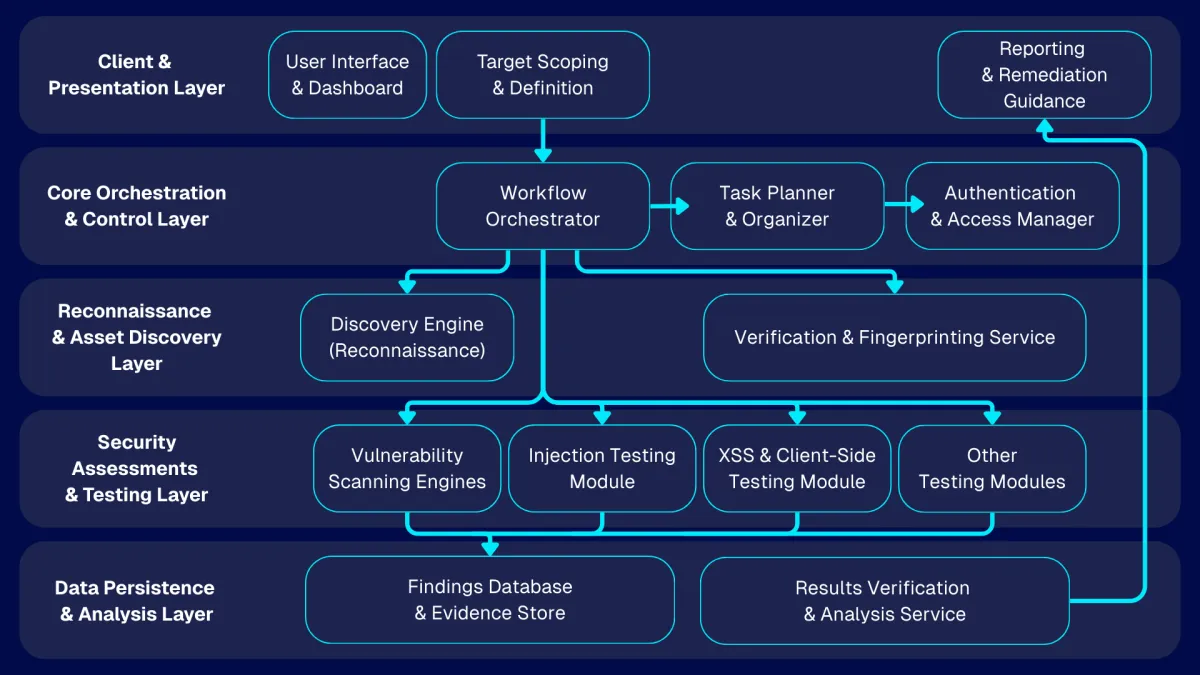

The agents powering HackerOne Agentic PTaaS form a cooperative multi-agent system (MAS) designed to mirror how an experienced security researcher approaches an application: systematically, iteratively, and with a tight feedback loop between discovery and validation.

Under the hood, it’s a hierarchical multi-agent system: instead of one “do everything” model, multiple specialized agents collaborate across the full testing lifecycle. A master orchestrator coordinates the sequence of work, routes tasks to the right specialist, and aggregates results into a single, coherent view of risk.

This structure lets the system scale coverage while keeping each agent focused, predictable, and easier to reason about.

At the core is a stateful workflow graph (implemented as a directed graph of steps) where each node represents an agent or a bounded function, and each transition is driven by the current testing context.

The shared state carries what the system has learned so far (target scope, authenticated session context when provided, discovered endpoints, input vectors, and emerging hypotheses), so downstream agents can build on upstream evidence. Each specialist follows a “reason + act + observe” loop: the LLM proposes the next best step, the agent executes the appropriate tooling through controlled interfaces, and the structured results are written back to the shared state for the rest of the system to reuse.
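To make the idea of a stateful workflow graph concrete, here is a minimal sketch assuming a simple in-process design: each node is a plain function that reads and updates a shared state object and returns the name of the next node. The names `AssessmentState`, `route`, `recon`, `plan`, and `run_graph` are hypothetical, and the recon and login steps are stand-ins rather than real tooling; this is not HackerOne's implementation.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional


@dataclass
class AssessmentState:
    """Shared state passed between nodes (field names are hypothetical)."""
    scope: List[str]
    credentials: Optional[dict] = None
    session: Optional[str] = None
    endpoints: List[str] = field(default_factory=list)
    hypotheses: List[str] = field(default_factory=list)
    log: List[str] = field(default_factory=list)


# A node reads and updates the shared state, then names the next node (None ends the run).
Node = Callable[[AssessmentState], Optional[str]]


def route(state: AssessmentState) -> Optional[str]:
    # Context-driven transition: only authenticate when credentials were supplied.
    return "authenticate" if state.credentials else "recon"


def authenticate(state: AssessmentState) -> Optional[str]:
    state.session = "placeholder-session"  # stand-in for automated login
    state.log.append("authenticated")
    return "recon"


def recon(state: AssessmentState) -> Optional[str]:
    # Stand-in for real enumeration and crawling: one endpoint per in-scope host.
    for host in state.scope:
        state.endpoints.append(f"https://{host}/search?q=")
    state.log.append(f"recon found {len(state.endpoints)} endpoint(s)")
    return "plan"


def plan(state: AssessmentState) -> Optional[str]:
    # A downstream node builds on upstream evidence stored in the shared state.
    state.hypotheses = [f"reflected input at {e}" for e in state.endpoints if "?" in e]
    state.log.append(f"planned {len(state.hypotheses)} hypothesis(es)")
    return None


NODES: Dict[str, Node] = {"route": route, "authenticate": authenticate, "recon": recon, "plan": plan}


def run_graph(nodes: Dict[str, Node], start: str, state: AssessmentState,
              max_steps: int = 50) -> AssessmentState:
    current: Optional[str] = start
    steps = 0
    while current is not None:
        if steps >= max_steps:  # guard against accidental cycles in the graph
            raise RuntimeError("step budget exhausted")
        current = nodes[current](state)
        steps += 1
    return state
```

Because every node communicates only through the shared state, a node can be swapped for an LLM-driven specialist without changing the graph runner.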

- The workflow typically begins with optional authentication, where the agent can automate login and capture session context to enable testing behind access controls.

- Next comes reconnaissance and validation, combining proven security utilities (for enumeration, probing, crawling, and baseline checks) with browser automation for realistic interaction and evidence capture.

- A dedicated planning layer then converts recon output into a prioritized set of test “indicators” (hypotheses such as “this input looks like it may be vulnerable”), and the orchestrator fans these out to specialized testing workflows (e.g., injection, XSS, authorization checks) that execute targeted validation and collect proof.

- Finally, a reporting layer produces a public-consumable narrative: what was tested, what was confirmed, supporting evidence, and clear remediation guidance.
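The planning and fan-out steps in the list above can be sketched as follows, assuming recon yields `(endpoint, parameter)` pairs and that indicators are ranked with simple name-based heuristics. The `Indicator` type, the category names, the priority values, and the parameter-name rules are all hypothetical illustrations; a real planner would weigh far richer evidence.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass(frozen=True)
class Indicator:
    endpoint: str
    parameter: str
    category: str   # hypothetical categories: "authz", "xss", "injection"
    priority: int   # higher means test first


def plan_indicators(params: List[Tuple[str, str]]) -> List[Indicator]:
    """Turn recon output into a prioritized list of test hypotheses."""
    indicators = []
    for endpoint, param in params:
        name = param.lower()
        if name in {"id", "user_id", "account"}:
            # Object identifiers suggest an authorization check.
            indicators.append(Indicator(endpoint, param, "authz", 3))
        if name in {"q", "search", "query"}:
            # Free-text inputs suggest reflection and injection checks.
            indicators.append(Indicator(endpoint, param, "xss", 2))
            indicators.append(Indicator(endpoint, param, "injection", 2))
    # Highest priority first, then a stable order for reproducibility.
    return sorted(indicators, key=lambda i: (-i.priority, i.endpoint, i.parameter, i.category))


def fan_out(indicators: List[Indicator],
            workflows: Dict[str, Callable[[Indicator], str]]) -> Dict[str, List[str]]:
    """Route each indicator to the specialist workflow registered for its category."""
    results: Dict[str, List[str]] = defaultdict(list)
    for ind in indicators:
        handler = workflows.get(ind.category)
        if handler is None:
            continue  # no specialist registered for this category
        results[ind.category].append(handler(ind))
    return dict(results)
```

Sorting deterministically keeps repeated runs on the same target comparable, which matters once results feed into benchmarks.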

A key architectural principle is safety by design. Tool access is scoped to each agent’s mission, workflows include verification steps to reduce false positives, and the orchestrator can enforce allowlists, rate controls, and conservative operating modes.
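An allowlist plus rate control can be sketched as a single guard that tools consult before sending any request. This assumes exact hostname matching and a token-bucket limiter; `ScopeGuard`, `permit`, and the injectable `clock` parameter are hypothetical names, not a documented HackerOne interface.

```python
import time
from typing import Callable, Iterable
from urllib.parse import urlsplit


class ScopeGuard:
    """Allow a request only if its host is allowlisted and the rate budget permits it."""

    def __init__(self, allowed_hosts: Iterable[str], rate_per_sec: float, burst: int,
                 clock: Callable[[], float] = time.monotonic):
        self.allowed_hosts = {h.lower() for h in allowed_hosts}
        self.rate = rate_per_sec
        self.capacity = float(burst)
        self.tokens = float(burst)
        self.clock = clock
        self.last = clock()

    def in_scope(self, url: str) -> bool:
        # urlsplit(...).hostname is already lowercased; exact match only, no wildcards.
        host = urlsplit(url).hostname
        return host is not None and host in self.allowed_hosts

    def _refill(self) -> None:
        # Token bucket: accrue tokens for elapsed time, capped at the burst size.
        now = self.clock()
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now

    def permit(self, url: str) -> bool:
        if not self.in_scope(url):
            return False  # out-of-scope requests never consume budget
        self._refill()
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False
```

Placing both checks in one object means a tool cannot satisfy the rate limit while silently drifting out of scope.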

Combined with checkpointing and observability hooks, these safeguards keep long-running assessments resilient and auditable while still enabling adaptive testing that learns from each intermediate result and refines its approach as it goes.
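Checkpointing can be illustrated with a small sketch that persists the list of completed steps and the shared state after each step, so a restarted run skips work it already finished. It assumes JSON-serializable state and a local file; `save_checkpoint`, `load_checkpoint`, and `run_with_checkpoints` are hypothetical helpers, and a production system would more likely checkpoint to durable remote storage.

```python
import json
import os
import tempfile
from typing import Callable, List, Tuple

Step = Tuple[str, Callable[[dict], dict]]


def save_checkpoint(path: str, completed: List[str], state: dict) -> None:
    directory = os.path.dirname(os.path.abspath(path))
    # Write to a temp file in the same directory, then atomically replace the old checkpoint,
    # so a crash mid-write never leaves a truncated file behind.
    fd, tmp = tempfile.mkstemp(dir=directory, suffix=".tmp")
    with os.fdopen(fd, "w") as f:
        json.dump({"completed": completed, "state": state}, f)
    os.replace(tmp, path)


def load_checkpoint(path: str) -> Tuple[List[str], dict]:
    if not os.path.exists(path):
        return [], {}
    with open(path) as f:
        payload = json.load(f)
    return payload["completed"], payload["state"]


def run_with_checkpoints(steps: List[Step], path: str) -> dict:
    completed, state = load_checkpoint(path)
    for name, fn in steps:
        if name in completed:
            continue  # resume: skip steps finished in an earlier attempt
        state = fn(state)
        completed.append(name)
        save_checkpoint(path, completed, state)
    return state
```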

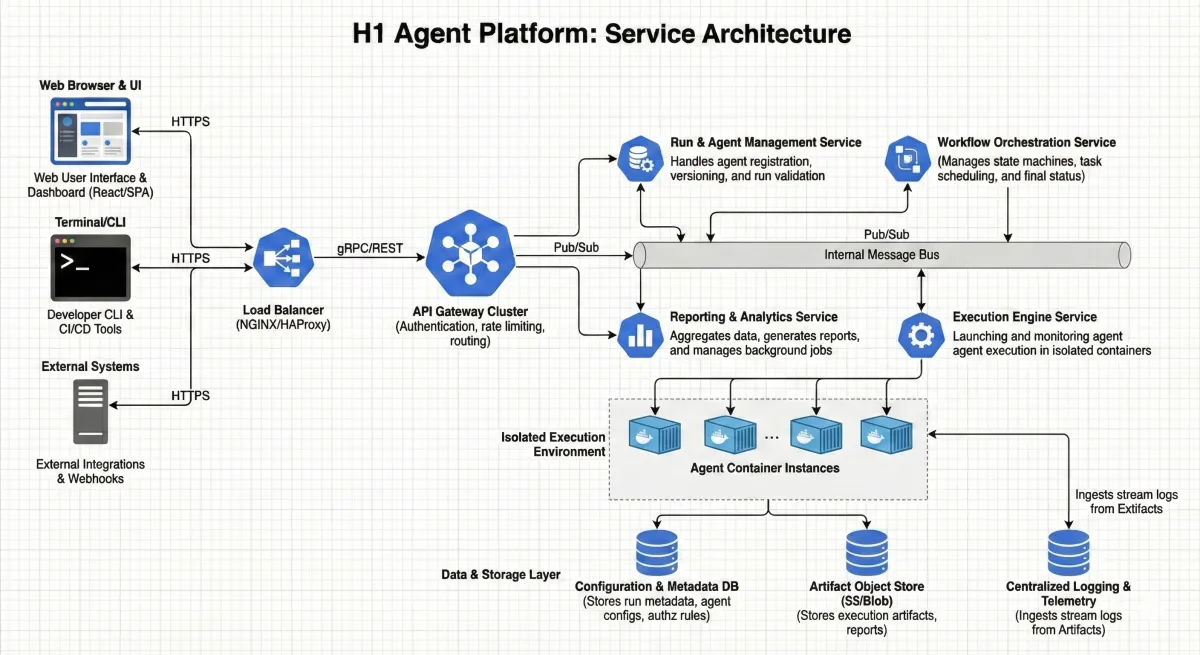

The Three-Tier Platform Built for Reliable, Long-Running Agent Runs

HackerOne’s autonomous agent platform is the control plane that safely runs security agents at scale, handling run requests, policy enforcement, execution, monitoring, and results delivery.

Rather than coupling these concerns into a single service, the platform uses a three-tier architecture that separates:

- The external API surface area

- Reliable orchestration of long-running executions, and

- A HackerOne-facing management experience

This keeps the system modular. Teams can evolve the user experience, orchestration logic, or execution environment independently while maintaining a consistent contract for launching and tracking runs.

At the front door, a dedicated API gateway layer accepts execution requests from the web UI, CLI, or CI/CD systems. This tier validates inputs, enforces multi-tenant access controls, applies quotas/rate limits, and records run metadata. It also serves as the “single place” for clients to query run status and retrieve artifacts, so callers don’t need to understand the underlying orchestration or compute details. The platform’s data plane is intentionally simple here: run metadata lives in a durable key-value store, and artifacts (reports, logs, screenshots, and other evidence) are stored in object storage under tenant-scoped prefixes.
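The gateway responsibilities described above (tenant access control, input validation, quotas, run metadata, and tenant-scoped artifact paths) can be sketched as below. The tenant-ID pattern, the "active runs per tenant" quota, the `tenants/<tenant>/runs/<run>/` key layout, and all class and function names are assumptions for illustration; an in-memory dict stands in for the durable key-value store.

```python
import re
import uuid
from dataclasses import dataclass
from typing import Dict

TENANT_ID = re.compile(r"^[a-z0-9][a-z0-9-]{1,62}$")  # hypothetical tenant-ID format
ACTIVE = {"QUEUED", "RUNNING"}


class QuotaExceeded(Exception):
    """Raised when a tenant already has its maximum number of active runs."""


@dataclass
class RunRequest:
    tenant_id: str
    target: str
    agent: str


class Gateway:
    def __init__(self, max_active_runs_per_tenant: int):
        self.max_active = max_active_runs_per_tenant
        # In-memory stand-in for the durable key-value store: run_id -> metadata.
        self.runs: Dict[str, dict] = {}

    def submit(self, caller_tenant: str, req: RunRequest) -> str:
        # Multi-tenant access control: callers may only launch runs for their own tenant.
        if caller_tenant != req.tenant_id:
            raise PermissionError("cross-tenant request rejected")
        if not TENANT_ID.match(req.tenant_id):
            raise ValueError("invalid tenant id")
        # Quota: count this tenant's runs that have not finished yet.
        active = sum(1 for r in self.runs.values()
                     if r["tenant_id"] == req.tenant_id and r["status"] in ACTIVE)
        if active >= self.max_active:
            raise QuotaExceeded(req.tenant_id)
        run_id = uuid.uuid4().hex
        self.runs[run_id] = {"tenant_id": req.tenant_id, "target": req.target,
                             "agent": req.agent, "status": "QUEUED"}
        return run_id


def artifact_key(tenant_id: str, run_id: str, name: str) -> str:
    # Reject names that could escape the tenant/run prefix in object storage.
    if name in ("", ".", "..") or "/" in name or "\\" in name:
        raise ValueError("artifact name must be a single path segment")
    return f"tenants/{tenant_id}/runs/{run_id}/{name}"
```

Deriving every storage key from the authenticated tenant, rather than from client-supplied paths, is one simple way to keep tenant scoping consistent from identity through storage.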

The orchestration layer is where reliability and scale come from. A serverless state machine coordinates the full lifecycle of each run: claiming work independently, launching an isolated container execution environment, monitoring completion, and updating authoritative run state. This design supports long-running or bursty workloads without requiring always-on workers, and it provides built-in patterns for retries, timeouts, cancellations, and checkpoint/resume. Execution happens in containerized, isolated runtimes that can scale up or down automatically, giving each run a predictable resource envelope while keeping tenant boundaries intact.
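The run lifecycle can be modeled as an explicit state machine with legal transitions, a conditional claim, and bounded retries. This is a minimal in-memory sketch; the status names, `max_attempts`, and the `Run` class are hypothetical, and a serverless orchestrator would express the same rules in its own workflow definition with state persisted in the metadata store.

```python
from enum import Enum


class Status(Enum):
    QUEUED = "QUEUED"
    CLAIMED = "CLAIMED"
    RUNNING = "RUNNING"
    SUCCEEDED = "SUCCEEDED"
    FAILED = "FAILED"
    TIMED_OUT = "TIMED_OUT"
    CANCELLED = "CANCELLED"


# Allowed transitions; SUCCEEDED and CANCELLED are terminal (no outgoing edges).
TRANSITIONS = {
    Status.QUEUED: {Status.CLAIMED, Status.CANCELLED},
    Status.CLAIMED: {Status.RUNNING, Status.CANCELLED},
    Status.RUNNING: {Status.SUCCEEDED, Status.FAILED, Status.TIMED_OUT, Status.CANCELLED},
    Status.FAILED: {Status.QUEUED},      # retry path
    Status.TIMED_OUT: {Status.QUEUED},   # retry path
}


class Run:
    def __init__(self, run_id: str, max_attempts: int = 3):
        self.run_id = run_id
        self.max_attempts = max_attempts
        self.status = Status.QUEUED
        self.attempts = 0

    def transition(self, new: Status) -> None:
        if new not in TRANSITIONS.get(self.status, set()):
            raise ValueError(f"illegal transition {self.status.value} -> {new.value}")
        if new is Status.CLAIMED:
            self.attempts += 1  # each claim starts a new attempt
        self.status = new

    def claim(self) -> bool:
        # Conditional update: only a QUEUED run can be claimed, so a duplicate claim is a no-op.
        if self.status is not Status.QUEUED:
            return False
        self.transition(Status.CLAIMED)
        return True

    def retry_or_fail(self) -> bool:
        """After FAILED or TIMED_OUT, requeue if attempts remain. Returns True if requeued."""
        if self.status not in (Status.FAILED, Status.TIMED_OUT):
            raise ValueError("only failed or timed-out runs can be retried")
        if self.attempts < self.max_attempts:
            self.transition(Status.QUEUED)
            return True
        return False
```

Making illegal transitions raise, instead of silently overwriting status, is what keeps the run state authoritative when retries, timeouts, and cancellations race each other.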

Finally, the management layer provides the operational surface for teams: a dashboard to create and track runs, background jobs to aggregate logs and generate exports, and tooling to support batch operations. Because it talks to the platform via the same APIs clients use, it stays decoupled from the internals of orchestration. Across all tiers, the platform emphasizes enterprise-grade guardrails—tenant scoping from identity through storage paths, structured audit trails, and comprehensive observability (logs, metrics, traces)—so autonomous testing can be operated safely and transparently in production environments.
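A structured audit trail usually means every action is recorded as a machine-parseable event with the same required fields. The sketch below assumes JSON Lines output with a UTC timestamp; the field names and the `audit_event` helper are hypothetical, not HackerOne's schema.

```python
import json
from datetime import datetime, timezone

REQUIRED = ("tenant_id", "actor", "action", "run_id")


def audit_event(tenant_id: str, actor: str, action: str, run_id: str, **details) -> str:
    """Return one JSON line describing who did what to which run, for which tenant."""
    event = {
        "ts": datetime.now(timezone.utc).isoformat(),
        "tenant_id": tenant_id,
        "actor": actor,
        "action": action,
        "run_id": run_id,
        "details": details,
    }
    # Refuse to emit events that could not be attributed or tenant-scoped later.
    for key in REQUIRED:
        if not event[key]:
            raise ValueError(f"audit event missing {key}")
    return json.dumps(event, sort_keys=True)
```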

A Repeatable Benchmark Framework Built to Mirror Real-World Risks

To build trustworthy autonomous security agents, we need a benchmarking system that is repeatable, fair, and representative of the web security problems teams face in production.

HackerOne’s approach combines breadth and realism: a large library of deliberately vulnerable applications across many vulnerability classes and difficulty levels, executed in isolated environments so results are comparable over time.

Each benchmark has a clear, machine-verifiable success condition, which allows us to measure progress across agent versions and configurations without relying on subjective scoring.
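One common way to make success machine-verifiable is to plant a secret flag in the vulnerable app and count a benchmark as solved only when a finding of the expected class carries that flag in its evidence. The sketch below assumes this CTF-style convention; `Benchmark`, `Finding`, the `FLAG{...}` format, and `is_solved` are hypothetical, and the source does not say which mechanism HackerOne uses.

```python
import secrets
from dataclasses import dataclass, field
from typing import List


@dataclass
class Benchmark:
    name: str
    vuln_class: str
    # A fresh random flag per deployment, so memorized answers cannot pass.
    flag: str = field(default_factory=lambda: "FLAG{" + secrets.token_hex(8) + "}")


@dataclass
class Finding:
    vuln_class: str
    evidence: str


def is_solved(bench: Benchmark, findings: List[Finding]) -> bool:
    """Solved only if a finding of the expected class includes the planted flag as proof."""
    return any(f.vuln_class == bench.vuln_class and bench.flag in f.evidence
               for f in findings)
```

Requiring both the right class and the flag avoids crediting an agent that stumbles onto the flag through an unrelated bug.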

First Pillar: Public Benchmarks

Leveraging widely used, community-recognized vulnerable apps and challenge sets makes it easier to compare techniques and validate claims with shared baselines. Public benchmarks provide an important common ground for the industry: when a result improves, everyone can understand what changed and why.

We also incorporate challenges that map to well-known security taxonomies (like common OWASP-style categories), so it’s easy to analyze where an agent is strong, where it’s brittle, and what types of bugs are still difficult.

Second & Third Pillars: HackerOne Internal Benchmarks and Custom Vulnerable Apps

These benchmarks let us test scenarios that mirror the complexity and patterns we see in real engagements.

These include custom vulnerable apps that emphasize realistic application behavior (authentication flows, multi-step business logic, API patterns, and database-backed state) while still being safe, isolated, and deterministic.

Internal benchmarks help ensure we’re not overfitting to a single public suite and can continuously add new cases as attacker techniques and web stacks evolve.

Fourth Pillar: Public CVEs

Public CVEs let us reproduce real-world vulnerabilities in controlled, containerized environments.

CVE-based benchmarks connect “benchmark success” to security outcomes practitioners recognize, and they help validate that agents can move beyond toy examples to vulnerabilities with real exploitation constraints.

Across all pillars, the goal is the same: establish a consistent yardstick that supports rapid iteration, tracking success rates, time-to-signal, and reliability, while keeping evaluations reproducible and hard to game.
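The tracking metrics named above can be computed from per-run records. This sketch assumes three hypothetical definitions: reliability is the share of runs that finished without an infrastructure error, success rate is the share of completed runs that solved their benchmark, and time-to-signal is the median seconds until the first verified finding on solved runs. `RunResult` and `summarize` are illustrative names.

```python
from dataclasses import dataclass
from statistics import median
from typing import List, Optional


@dataclass
class RunResult:
    benchmark: str
    completed: bool                           # finished without infrastructure error
    solved: bool                              # success condition met
    seconds_to_first_signal: Optional[float]  # None if no verified finding


def summarize(results: List[RunResult]) -> dict:
    total = len(results)
    completed = [r for r in results if r.completed]
    solved = [r for r in completed if r.solved]
    times = [r.seconds_to_first_signal for r in solved if r.seconds_to_first_signal is not None]
    return {
        "reliability": len(completed) / total if total else 0.0,
        # Measured over completed runs, so infrastructure failures do not count against the agent.
        "success_rate": len(solved) / len(completed) if completed else 0.0,
        "median_time_to_signal_s": median(times) if times else None,
    }
```

Separating reliability from success rate keeps platform flakiness from being mistaken for an agent regression, or vice versa.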

Fifth Pillar: Side-Car Runs

Side-car runs are enabled by HackerOne’s active penetration testing business. Because HackerOne operates a large, ongoing pentesting program, we have strong relationships with customers who are motivated to engage as Design Partners.

This allows us to run the PTaaS agents alongside real customer assets, under explicit permission and scoped controls, and evaluate performance using real application behavior and data.

These side-car runs provide direct feedback on signal quality, false positives, exploit realism, and report usefulness, ensuring our benchmarks remain tightly aligned with real customer risk and production security outcomes.

A Practical Path to Continuous, Verified Security

Autonomous penetration testing only works when intelligence, infrastructure, and measurement evolve together.

With the agents powering HackerOne Pentest as a Service (PTaaS), we bring those pieces into a single system: a multi-agent AI that mirrors how experienced pentesters think and work, a production-grade platform that executes safely and at scale, and a rigorous benchmark suite grounded in real vulnerabilities and real exploitation paths.

Together, they create a foundation for continuous, repeatable security validation, one that helps teams move faster without sacrificing rigor, scale testing without losing context, and anchor AI-driven findings in reproducible, evidence-based results.

As autonomous security research continues to mature, this integrated approach ensures pentesting remains transparent, measurable, and tightly aligned with the realities of modern offensive security.

See how HackerOne's Agentic Pentest as a Service (PTaaS) drives real continuous validation.