AI red teamers unpack common misconceptions about AI red teaming and explain its role in securing AI-powered applications.

Main navigation

- Platform

-

Bug Bounty

Continuous Researcher-led Testing

-

Pentest as a Service

Human-led & Agentic Pentests

-

Response

Vulnerability Disclosure Program (VDP)

- Solutions

- Use Cases

- Adversarial Exposure Validation

- AI Security, Safety & Trust

- Application Security

- Cloud Security

- Continuous Security Testing

- Continuous Vulnerability Discovery

- Crowdsourced Security

- CTEM

- Vulnerability Management

- Web3

- Industries

- Automotive & Transportation

- Crypto & Blockchain

- Financial Services

- Public Sector

- Healthcare

- Retail & E-Commerce

- Hospitality & Entertainment

- US Federal

- UK Government

-

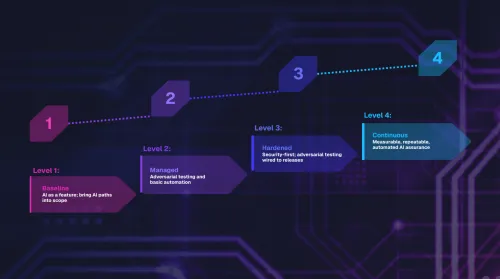

HeadingThe Future of AISub HeadingA Security GuideCTA Component

- Partners

- Researchers

- Resources

-

HeadingResearch ReportSub HeadingBenchmarks & insights from 500K vulnerability reports.CTA Component

- Company

- Get Started

- Login
