How AI Security Is Transforming Offensive Security: Insights to Carry Into 2026

Over the last decade, vulnerability research has moved through distinct phases. Earlier editions of the Hacker-Powered Security Report from HackerOne captured the rise of coordinated disclosure, the first generation of bug bounty programs, and the shift toward API-heavy and cloud-native applications.

The 9th Hacker-Powered Security Report continues the pattern of steadily increasing high-impact findings, but it also signals a new shift. Organizations are integrating AI into their products at an unprecedented rate, and researchers are incorporating AI into their workflows just as quickly.

This two-sided acceleration is reshaping the conditions under which vulnerabilities are discovered. The latest report shows that AI is influencing both what researchers test and how they test it, and this is visible across nearly every dataset in the report.

Automation and Hackbots Are Expanding Baseline Coverage

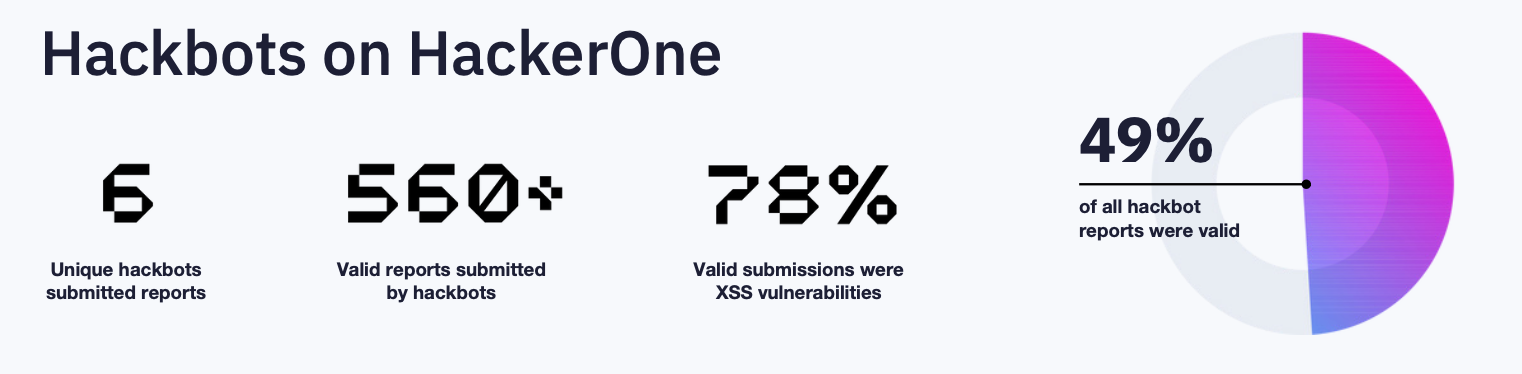

The 9th report includes one of the clearest signals yet about the growing role of automated agents in vulnerability discovery. Hackbots are now a measurable part of the offensive security ecosystem.

During the reporting period, more than 1,100 hackbot submissions were received, and nearly half were valid. That level of automated, precise contribution would have been difficult to imagine only a few years ago.

The report shows where hackbots are strongest. They excel at probing vulnerabilities that map cleanly to known patterns, which is why 78% of valid hackbot findings were XSS. They are becoming effective at clearing the baseline. HackerOne has updated its leaderboard to include collectives and automated contributors, reflecting the practical reality that automation now plays a visible role in crowdsourced security.

Two-thirds of researchers expect hackbots to enhance their work rather than replace it, and 43% view them primarily as tools for simpler vulnerabilities. Automation is improving and will continue to grow more capable, but the high-impact discoveries still come from human insight, curiosity, and the ability to piece behaviours together across components.

Hackbots increase coverage whilst researchers continue to push the frontier.

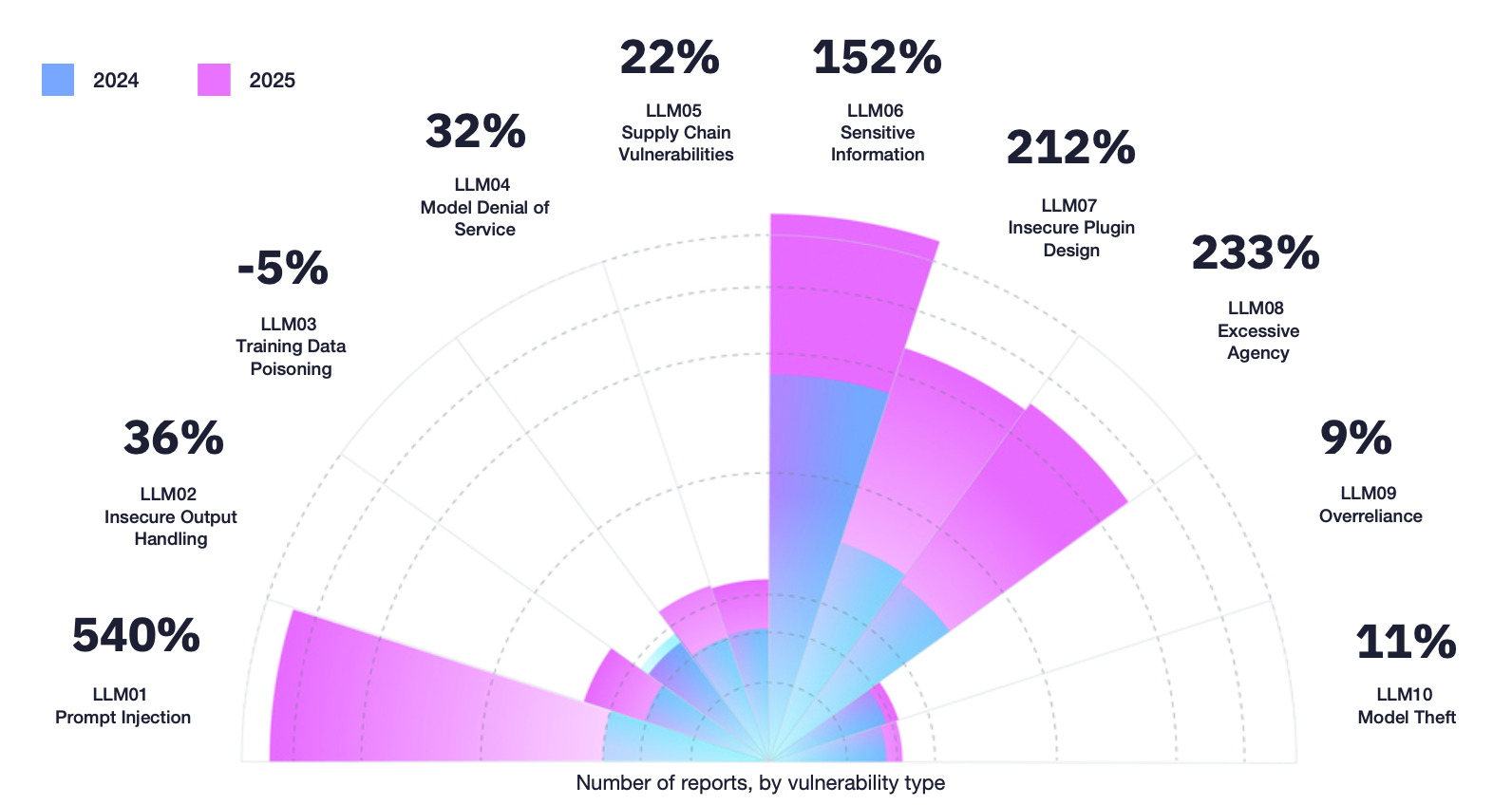

AI Is Now a High-Impact and High-Reward Surface

The most striking trend in the 9th report is the growth of AI-related vulnerabilities. Valid AI findings increased by 210% year over year. Prompt injection rose by 540%. More than 1,121 programs included AI in scope or had a valid AI report in 2025, a 270% increase compared to the previous year.

These findings reflect a broader reality. AI features create behaviors that do not follow fixed paths. Issues arise from prompt interpretation, model decision-making, retrieval failures, and AI tools performing unintended actions.

These trends mirror real-world incidents observed outside formal vulnerability programs. High-profile examples, from large-scale model jailbreaks and agent misuse in plugin-enabled systems to supply chain compromises like Shai-Hulud 2.0, highlight how small integration failures can cascade into real-world impact. These are the same classes of issues now surfacing more frequently in paid security reports.

Researchers are identifying weaknesses in components that companies have never previously considered part of their security surface. The demand for AI-related testing is clear. The payouts are real.

Researchers who understand AI behavior are finding issues that matter to program owners.

AI Is Transforming the Way Security Researchers Work

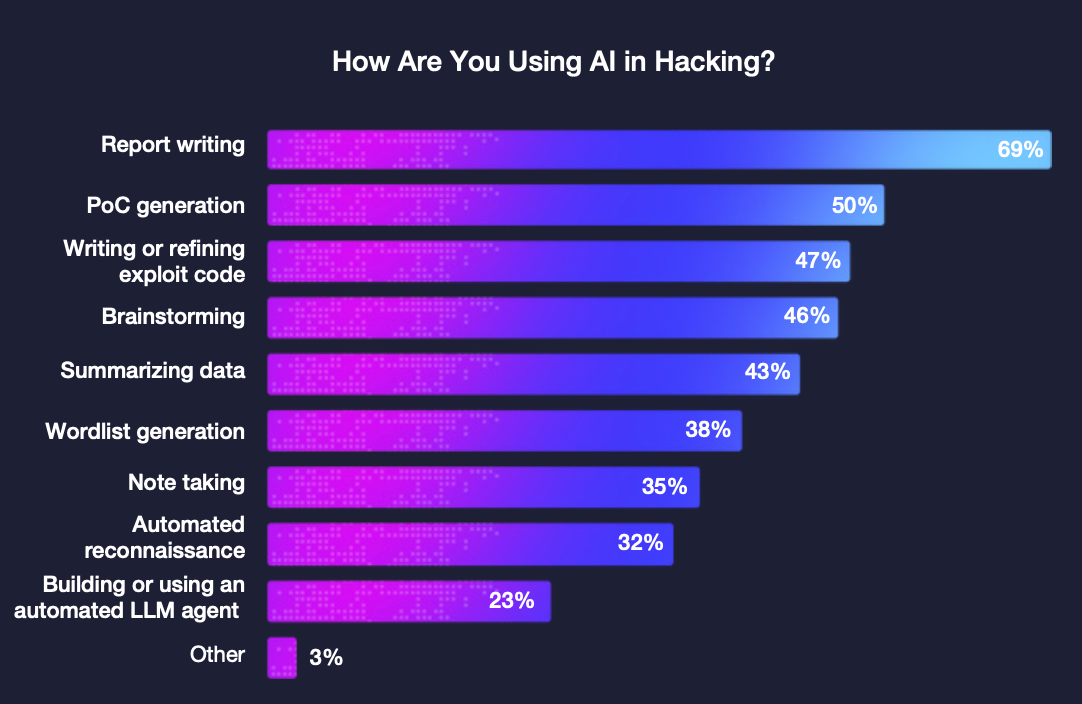

The 9th report shows how AI is altering the way vulnerability research is performed. More than two-thirds of surveyed researchers now use AI or automation tools to accelerate reconnaissance, speed up testing, and reduce repetitive tasks.

These tools remove friction, accelerate ideation, and allow researchers to explore broader hypothesis spaces without slowing down.

This trend extends well beyond the bug bounty ecosystem. Recent reporting from Anthropic on state-sponsored threat actors' use of AI shows how these actors are operationalising AI to scale and automate their activity, increasing their operational sophistication.

The same workflow acceleration that makes researchers more productive also exists on the threat actor side, and that pressure inevitably pushes defensive research and bug bounty work to evolve in the same direction. The coming years will likely see researchers integrate AI more deeply into their methodologies to keep pace with expanding attack surfaces and also to match the increasing speed and scale seen in adversarial use of AI.

At the same time, the latest report highlights a significant limitation: AI output remains inconsistent without human oversight. Reports written entirely by AI are often polished but technically shallow, and triage teams can identify them quickly.

The most impactful findings still come from researchers who understand what they are testing, why it behaves the way it does, and where the underlying weaknesses lie.

AI can support speed, but it cannot supply judgment or system understanding.

Architecture and Logic Flaws Are Increasing in Value

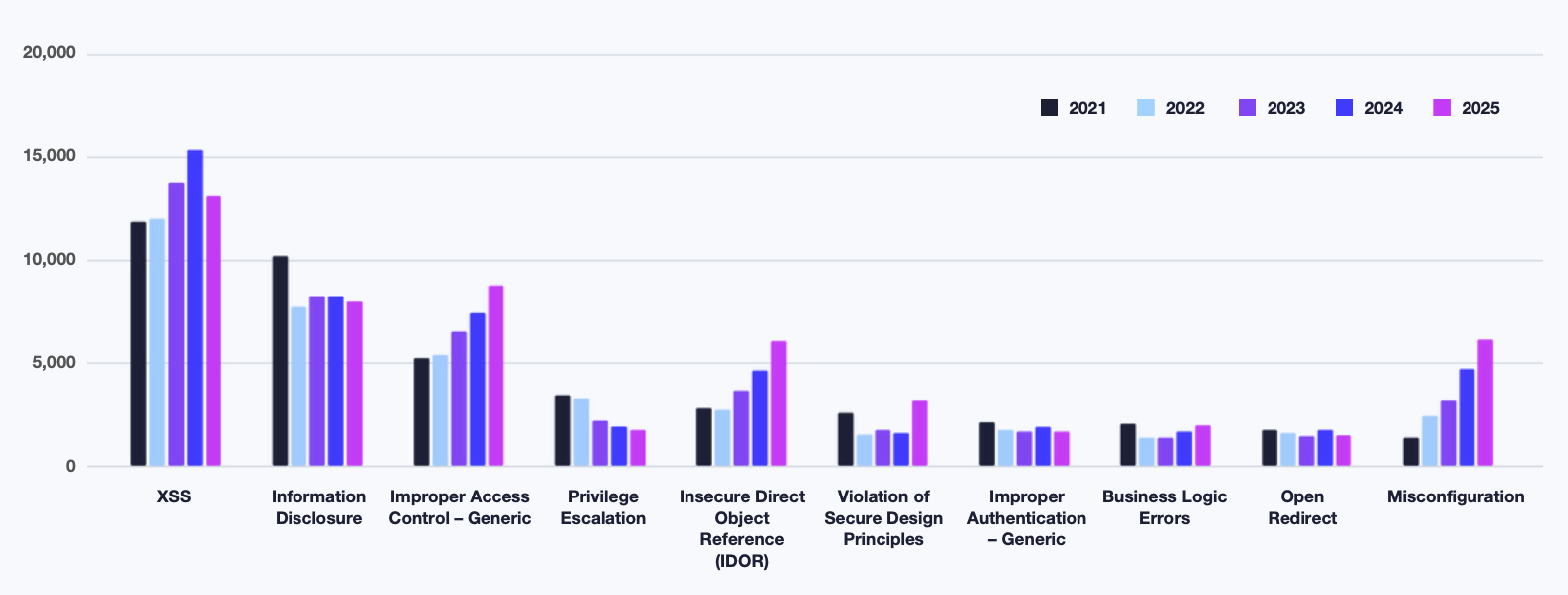

Five-year trend data in the report shows a rise in vulnerability categories that require understanding how systems work rather than how payloads break them.

Traditional issues like XSS have declined from their peak in 2024. Findings involving access control issues, IDOR variations and misconfigurations are becoming more common.

Modern applications rely on distributed services, identity layers, cloud environments, and multi-tenant constraints. Weaknesses between these components can produce significant impact. Researchers who understand how a workflow is intended to function and can identify where that intention fails continue to find the highest-value vulnerabilities.
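To make the access control class concrete, here is a minimal sketch of an IDOR in a multi-tenant lookup, alongside a version that enforces ownership. It is illustrative only and does not come from the report: the `Invoice` type, the `INVOICES` store, and both function names are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Invoice:
    invoice_id: int
    tenant_id: str
    amount_cents: int


# Hypothetical in-memory store standing in for a real database.
INVOICES = {
    1: Invoice(1, "tenant-a", 12_000),
    2: Invoice(2, "tenant-b", 98_500),
}


def get_invoice_vulnerable(invoice_id: int, caller_tenant: str) -> Optional[Invoice]:
    """Looks up by ID only; the caller's tenant is never checked (IDOR)."""
    return INVOICES.get(invoice_id)


def get_invoice_checked(invoice_id: int, caller_tenant: str) -> Optional[Invoice]:
    """Returns the invoice only when it belongs to the caller's tenant."""
    invoice = INVOICES.get(invoice_id)
    if invoice is None or invoice.tenant_id != caller_tenant:
        # Same response for "missing" and "not yours" avoids leaking which IDs exist.
        return None
    return invoice
```

Nothing in the vulnerable version looks broken in isolation, and no payload is involved. The flaw only appears when the lookup is compared with the intended rule that tenants see their own invoices, which is why this class of issue tends to reward understanding of the workflow over pattern matching.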

These findings also tend to earn stronger payouts. Deeper understanding continues to be more valuable than surface-level enumeration.

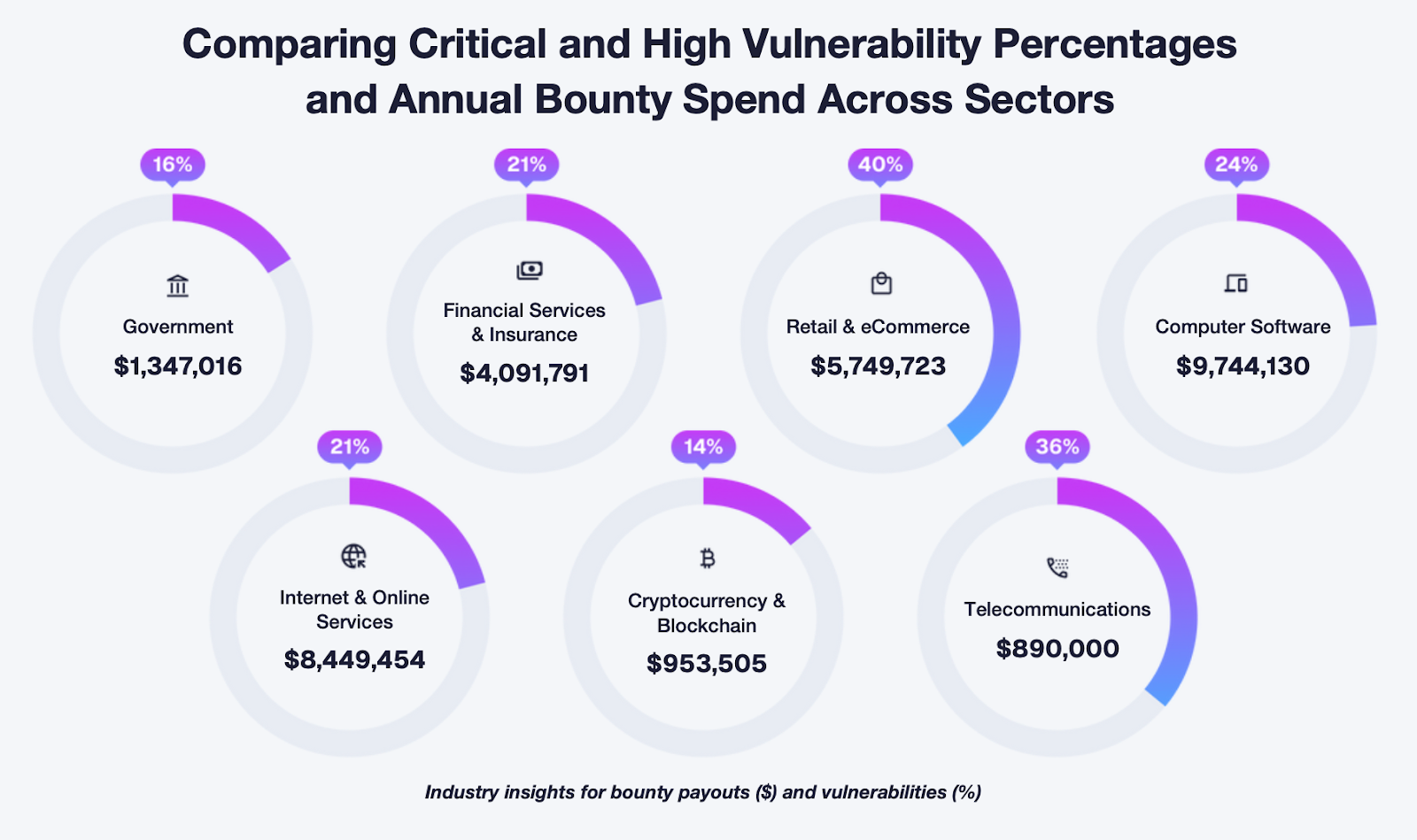

Incentives Still Shape Researcher Focus

The 9th report reinforces the relationship between reward consistency and researcher engagement. Programs that reduce payouts see a drop in valid and high-severity findings. Programs that maintain strong rewards attract sustained, high-quality participation.

- 73% of programs saw a drop in valid submissions

- 50% of programs saw a decline in critical submissions

Sectors with strong incentives include financial services, cryptocurrency, government, and AI-driven SaaS. These industries adopt new technology quickly and face high-impact consequences when vulnerabilities appear. They also tend to maintain competitive reward structures, which signals to researchers that time invested will be worthwhile.

The Future Skillset Blends AI-Driven Efficiency With Human Insight

The most valuable skills in the coming years sit at the intersection of automation and depth. Researchers are using AI to strip away the tedious parts of vulnerability discovery. They are automating reconnaissance, scaling pattern analysis, clearing repetitive checks and creating more space for the work that actually moves findings forward.

This saved time is being redirected into deep understanding. High-impact bugs still come from researchers who can interpret system behaviour, piece together complex interactions across components, and recognise where real-world workflows diverge from intended design. AI can increase reach and scale, but the strongest results still come from human reasoning applied to complex systems.

Researchers who combine efficient AI-assisted workflows with deliberate, focused investigation will be best positioned as modern applications continue to grow in complexity.

Building a Research Future Where AI Amplifies Human Expertise

The 9th Hacker-Powered Security Report highlights how the landscape is evolving with a new wave of AI-driven technologies. AI is enlarging the attack surface and accelerating researcher workflows. Agentic AI is improving baseline coverage but not replacing expert insight. Architecture and logic flaws are growing in importance. Incentives continue to shape where researchers invest time and focus.

Researchers who succeed in this environment will be those who stay curious and treat AI as a way to free up time for what they do best: hacking.