AI Developers’ AMA: AI System Design and Development

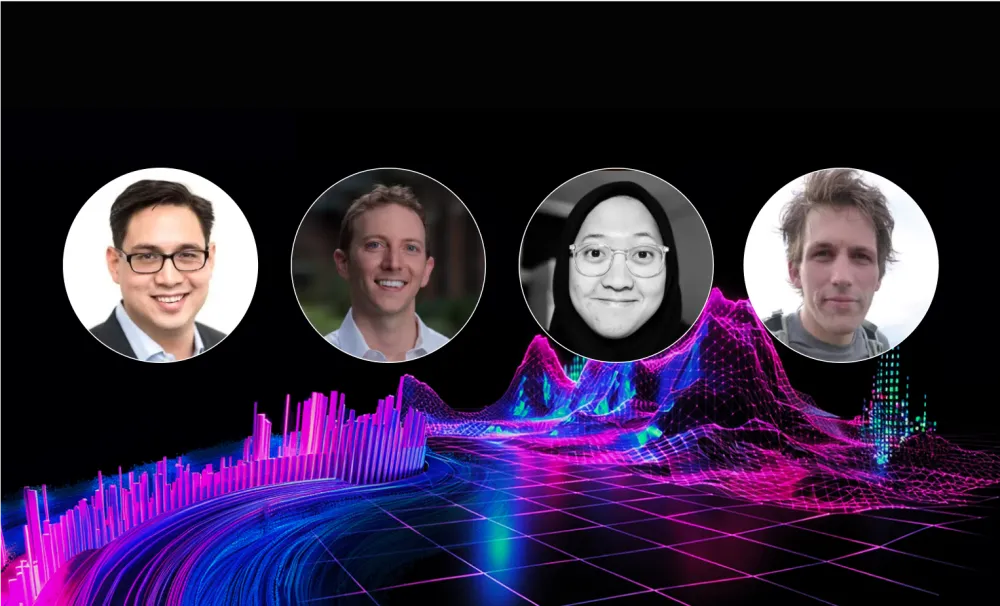

During our recent webinar, Beyond the Algorithm: AI Developers’ Ask-Me-Anything, four seasoned AI engineers answered questions about applied AI and machine learning (ML). Drawing on their professional experience, they covered technical challenges, opportunities for innovation, ethical considerations, and how to identify and mitigate algorithmic flaws. We were joined by engineer, creative technologist, and angel investor Luciano Cheng; Frictionless Systems founder Carter Jernigan; and two of HackerOne’s own: Software Engineer of Applied AI/ML Zahra Putri Fitrianti and Principal Software Engineer Willian van der Velde.

This post highlights key takeaways from the first section of the webinar, AI system design and development, which answered questions about:

- Assessing the need and selecting the appropriate AI model

- Challenges and best practices in AI adoption and implementation

- Practical AI applications and mitigation strategies

Q: What is tokenization, and how does it relate to AI models?

Luciano:

A token is the smallest unit a model can take as input or produce as output. Models aren’t trained on how to spell. Instead, they are given a set of words and word fragments, and that set is the atomic level of the output. "Token" is the ML/AI term for the smallest thing that can be inputted or outputted.

Carter:

AI models think of words as split into chunks called tokens. As a rule of thumb for English text, a token is roughly three-quarters of a word, so your token count runs somewhat higher than your word count. That’s how models measure text.
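To make the word-to-token relationship concrete, here is a minimal sketch that estimates token counts without a real tokenizer. It assumes the widely quoted rule of thumb for English text (about four characters, or about three-quarters of a word, per token). The constants and function names are illustrative assumptions; actual counts depend on the specific model's tokenizer.

```python
import math

# Rule-of-thumb ratios for English text (assumptions, not exact values).
# Real tokenizers vary by model and by language.
CHARS_PER_TOKEN = 4
WORDS_PER_TOKEN = 0.75


def estimate_tokens_from_words(text: str) -> int:
    """Estimate token count from the number of whitespace-separated words."""
    word_count = len(text.split())
    return math.ceil(word_count / WORDS_PER_TOKEN)


def estimate_tokens_from_chars(text: str) -> int:
    """Estimate token count from the number of characters."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


sample = "AI models think of words split into chunks called tokens."
print("words:", len(sample.split()))
print("estimate from words:", estimate_tokens_from_words(sample))
print("estimate from chars:", estimate_tokens_from_chars(sample))
```

The two estimates will rarely agree exactly, which is a useful reminder that they are budgeting aids, not measurements.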

Q: How do you select the perfect AI model for your business?

Carter:

Before selecting an AI model, the more important question to ask is, ‘Are you sure you need AI to solve your problem?’ Many problems can be solved with traditional approaches, but there are plenty of cases where AI really does make sense.

When picking a large language model (LLM), I start with the best available, such as GPT-4 or Claude Opus, and see what kind of results I get. It’s easy to get good results with good models. Then, I work backward to see if I can get similar results with smaller models to reduce my cost and inference time. From there, it comes down to what your business needs and requirements are in terms of cost, latency, licensing, hosting, etc.
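The "start with the best, then work backward" approach can be sketched as a small selection routine. This is only an illustration under stated assumptions: the model labels, quality scores, costs, and latencies below are made-up placeholders, and `quality` is assumed to come from your own evaluation set rather than any published benchmark.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str             # hypothetical model label
    quality: float        # score on your own evaluation set, 0.0 to 1.0
    cost_per_call: float  # illustrative cost in arbitrary units
    latency_s: float      # illustrative average latency in seconds


def pick_model(candidates, tolerance=0.05, max_latency_s=None):
    """Return the cheapest candidate whose quality is within `tolerance`
    of the best candidate's quality and that meets the latency limit."""
    best_quality = max(c.quality for c in candidates)
    acceptable = [
        c for c in candidates
        if c.quality >= best_quality - tolerance
        and (max_latency_s is None or c.latency_s <= max_latency_s)
    ]
    if not acceptable:
        raise ValueError("no candidate meets the quality and latency requirements")
    return min(acceptable, key=lambda c: c.cost_per_call)


# Placeholder numbers purely for illustration.
candidates = [
    Candidate("large-model", quality=0.92, cost_per_call=10.0, latency_s=3.0),
    Candidate("medium-model", quality=0.89, cost_per_call=2.0, latency_s=1.2),
    Candidate("small-model", quality=0.75, cost_per_call=0.5, latency_s=0.4),
]

print(pick_model(candidates).name)
```

The tolerance encodes how much quality you are willing to trade for lower cost; licensing and hosting constraints would be additional filters in the same spirit.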

Luciano:

Make sure you understand the data you’re putting into it and what your expectation is for the result. For example, here are two different problems that require different solutions:

If you have a fleet of trucks running different routes and are trying to solve a problem regarding a specific route, AI can only get you so far. A problem like this relies on external factors, such as individual drivers, which are outside AI’s control. AI can make it more efficient, but AI alone cannot solve that problem.

I once needed to reduce a lot of text down to a true/false answer as to whether or not a project was on time, but I put too much data into the model at once, so the model couldn’t tokenize and analyze all the data. This is why deciding which model to use, or whether to use AI at all, is completely secondary to understanding the data, the product, and the expectations.
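One common way to avoid feeding too much data into a model at once is to split the text into chunks that fit the model's limit, classify each chunk, and then combine the answers. The sketch below is an assumption about how that could look, not the approach Luciano actually used. `keyword_classifier` is a hypothetical stand-in for a real model call, and the rule "on time only if no chunk reports a delay" is an illustrative choice.

```python
def split_into_chunks(text, max_words=200):
    """Split text into consecutive chunks of at most `max_words` words."""
    words = text.split()
    return [
        " ".join(words[i:i + max_words])
        for i in range(0, len(words), max_words)
    ]


def project_on_time(text, classify_chunk, max_words=200):
    """Return True only if every chunk is classified as on time.

    `classify_chunk` takes one chunk of text and returns True (on time)
    or False (late). In practice it would wrap a model call.
    """
    chunks = split_into_chunks(text, max_words)
    return all(classify_chunk(chunk) for chunk in chunks)


def keyword_classifier(chunk):
    """Hypothetical placeholder for a model call: flags any chunk
    that mentions the word 'delayed' as late."""
    return "delayed" not in chunk.lower()


status_report = "Milestone reached on schedule. " * 150
print(len(split_into_chunks(status_report)))
print(project_on_time(status_report, keyword_classifier))
```

A word budget is used here for simplicity; a production version would budget in tokens using the target model's own tokenizer.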

Q: What are some of the most annoying challenges you’re facing with enterprise AI adoption?

Willian:

Internally, the challenge is how to empower our colleagues to develop products with AI. Externally, we have to answer how we sell AI-driven solutions.

We have to build trust with our [HackerOne platform] users. With LLMs, especially, organizations fear that the models will hallucinate random facts and damage their brand. So, we have to be transparent about what systems we use and how we train our models in order to get customers to opt in to our feature set.

LLMs open a great opportunity to explore new features and value, but there is a risk of hallucination. We take a defensive approach to limit the number of hallucinations for enterprise customers.

Read more about the hacker perspective on Generative AI.

Luciano:

The first challenge is that data is never clean in the real world. People are surprised about how much clean data they need to tune an off-the-shelf model. Every time I start a machine learning project, I think I’m going to be doing really fun, sci-fi work, and instead, I’m data cleaning. You need data engineers, testing harnesses, data pipelines, a front end, etc. It can be very mucky work, but in the end, it can be very valuable if you choose the correct problem.

The second challenge is problem selection. Some problems lend themselves well to AI, and some do not. It’s counterproductive to try to solve problems with AI that cannot be solved with AI. People often choose a product in a market, approach it with AI as a tool, and then find that it’s not the correct tool to use.

AI is best at taking something computationally intractable and reducing it to something more precise; summarization is a great example. Most technical people can use off-the-shelf LLMs with off-the-shelf tools to produce a summarization product. You can use data that is very imprecise to produce something of immediate value.

The opposite would be something like chess. The number of potential chess games is more than the number of atoms in the universe. AI can only get you so far; chess is already so precise and not tractable for AI, despite its apparent simplicity.

Carter:

There are many different use cases for LLMs, and a common one is answering questions on a data set. People often take their PDF, chunk it into a database, and use that to ask questions of the LLM. This generally works well, but after a while, you’ll notice the AI gets obsessed with certain things in your data set. Sometimes you need to modify the data so the model stops obsessing over that one thing and produces much better output. The original data needs to be tuned for better knowledge retrieval.

Q: How do you create or use a local LLM for your own private data?

Willian:

LangChain and Hugging Face are great resources and have many tutorials and libraries pre-built, especially for the fundamentals of Retrieval-Augmented Generation (RAG) and how to populate a RAG database.

Luciano:

I recommend using whatever database you’re comfortable with (SQLite, DuckDB, Postgres), but I don’t recommend training your own model from scratch. It’s fascinating as an academic exercise but requires more resources than the average person has. I highly recommend pulling an open-source model; otherwise, it’s going to take a long time and extensive resources.
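To show the basic shape of populating and querying a RAG database, here is a minimal sketch using SQLite from Python's standard library. It makes several simplifying assumptions: the table layout and function names are invented for illustration, and retrieval ranks chunks by shared keywords as a stand-in for the embedding similarity a real RAG system (for example, one built with LangChain or Hugging Face tooling) would typically use.

```python
import re
import sqlite3


def tokenize(text):
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def build_store(chunks):
    """Create an in-memory SQLite table and insert one row per chunk."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE chunks (id INTEGER PRIMARY KEY, body TEXT NOT NULL)")
    conn.executemany("INSERT INTO chunks (body) VALUES (?)", [(c,) for c in chunks])
    conn.commit()
    return conn


def retrieve(conn, question, top_k=2):
    """Return up to `top_k` chunks sharing the most words with the question.

    Keyword overlap is a placeholder for embedding similarity.
    """
    terms = tokenize(question)
    rows = conn.execute("SELECT id, body FROM chunks").fetchall()
    scored = [(len(terms & tokenize(body)), chunk_id, body) for chunk_id, body in rows]
    scored.sort(key=lambda item: (-item[0], item[1]))
    return [body for score, _, body in scored[:top_k] if score > 0]


def build_prompt(question, passages):
    """Assemble a prompt that asks the model to answer from the passages only."""
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )


docs = [
    "Invoices are due within 30 days.",
    "The office is closed on public holidays.",
    "Refunds are processed within 5 business days.",
]
store = build_store(docs)
question = "When are invoices due?"
print(build_prompt(question, retrieve(store, question, top_k=1)))
```

The resulting prompt would then be sent to whichever open-source or hosted model you have chosen; that call is deliberately left out here.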

Carter:

I like to split the problem apart. You’re trying to learn multiple things at once, and learning one thing at a time will make things easier. Start with a hosted model such as OpenAI’s GPT-4, and don’t worry about doing it locally. If you’re concerned about the privacy of your data, use some other public data to experiment and figure out the process.

Q: Are LLMs actually knowledge models, or are they just models that understand language?

Luciano:

An LLM is not a person. It’s a tokenization system. It takes the data you give it and predicts the next token. It’s good at using a large amount of context to make those predictions, more context than the average human could take in. I don’t love anthropomorphizing LLMs. They’re software like any other software.

Carter:

The best way to get the most out of LLMs is to remember that they operate off of text that you give them. Instead of treating them like a search engine like Google, it’s much better to give them text and ask them to process that text in a certain way. In that way, it is a language model operating on the information you gave it as opposed to trying to retrieve knowledge it was given in its original training.

Q: What are some “low-hanging fruit” use cases for AI within a tech-heavy company?

Willian:

Build a system that helps you monitor security findings. You can leverage an LLM to enrich a finding when there’s a detection, so you spend less time on triage and prioritize it faster. That’s the low-hanging fruit for cybersecurity, whether it’s a finding from a scanner, a bug bounty program, or pentesting. Collect all the data and summarize the findings.

Code review is another one. You can use an LLM to do a first pass to find insecure code.

Carter:

When reviewing code, being very specific will get you much more specific answers. For example, when providing the code to be reviewed, tell the LLM you are concerned about SQL injection on a particular line.
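As a rough illustration of what a specific review request looks like, the sketch below builds a prompt that numbers the code lines and names both the concern and the line in question. The function name, prompt wording, and sample snippet are all assumptions made for this example; the prompt would be passed to whichever LLM you use.

```python
def build_review_prompt(code: str, concern: str, line_number: int) -> str:
    """Build a focused code review prompt naming a concern and a line."""
    lines = code.splitlines()
    if not 1 <= line_number <= len(lines):
        raise ValueError(f"line_number must be between 1 and {len(lines)}")
    # Number each line so the model can match the reference unambiguously.
    numbered = "\n".join(f"{i}: {line}" for i, line in enumerate(lines, start=1))
    return (
        f"Review the following code. I am concerned about {concern} "
        f"on line {line_number}.\n"
        "Explain whether that line is vulnerable and suggest a fix.\n\n"
        f"{numbered}"
    )


# Deliberately insecure sample: the query is built by string concatenation.
snippet = '''def find_user(cursor, name):
    query = "SELECT * FROM users WHERE name = '" + name + "'"
    cursor.execute(query)
    return cursor.fetchone()'''

prompt = build_review_prompt(snippet, "SQL injection", 2)
print(prompt)
```

Compared with a generic "review this code" request, naming the vulnerability class and the line gives the model a narrow question to answer.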

Q: How do you handle licensed open-source software provided by LLMs for a query, especially when the LLMs provide unsourced snippets of code from the web?

Luciano:

When I’m deploying and managing systems, I don’t do something unless it’s blessed by a third party. I’m not a lawyer, but depending on the complexity of the problem you’re trying to solve, the license could be critical to your business or completely irrelevant. When I implement models, I’m conservative about the licenses I choose to use.

Carter:

GitHub Copilot and OpenAI offer copyright indemnification: they accept liability instead of you. Of course, check with lawyers, but those solutions give us better confidence about the risk we might be taking when an LLM provides code that might be open source but is not attributed.

Want to learn even more from these AI and ML engineers? Watch the full AI Developers’ AMA webinar.