AI Security Findings Are Growing Faster Than Teams Can Fix Them

In 2025, enterprise AI security programs face a paradox: defenders are getting better at finding vulnerabilities, yet remediation can’t keep pace because those vulnerabilities are multiplying even faster.

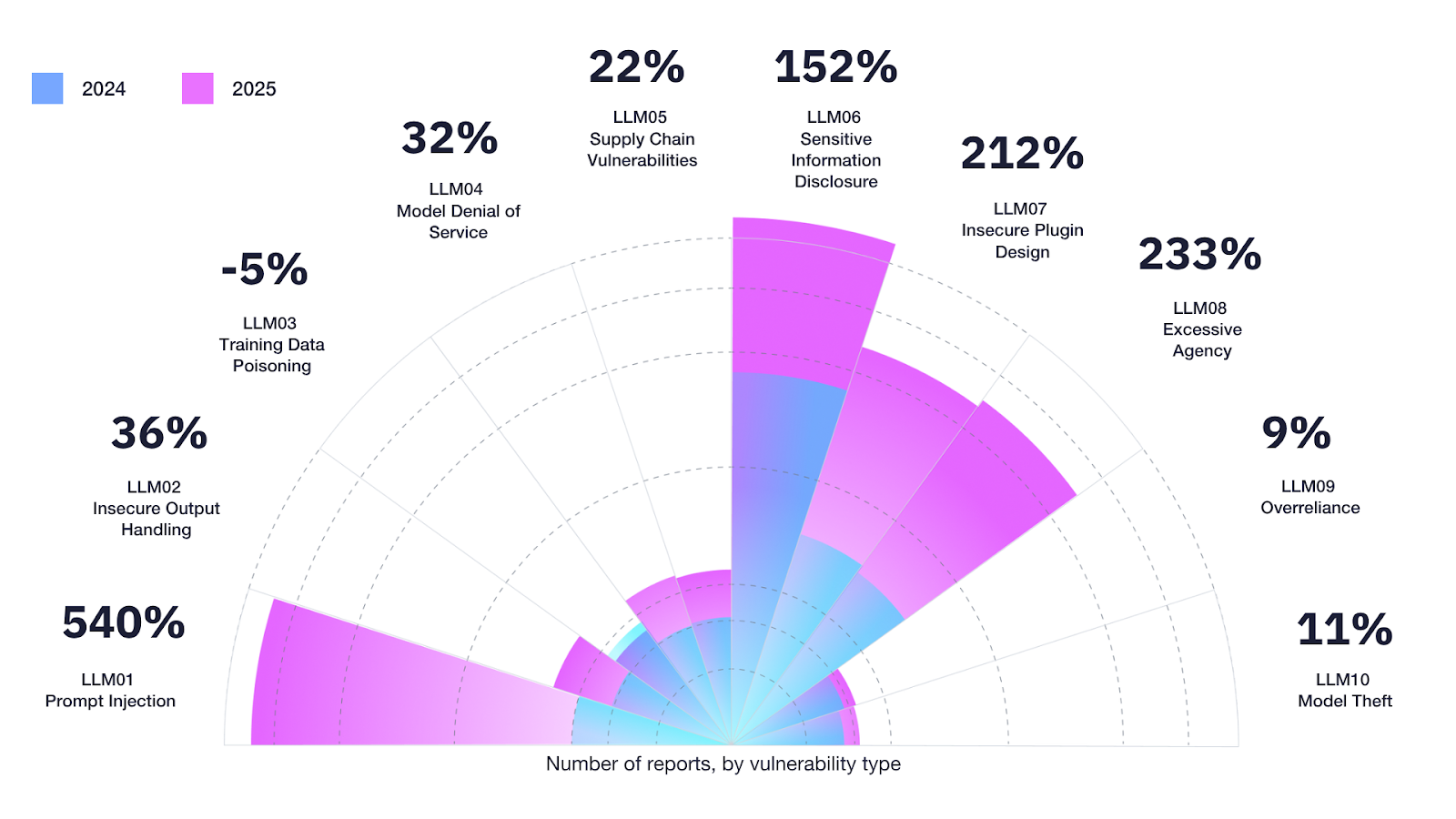

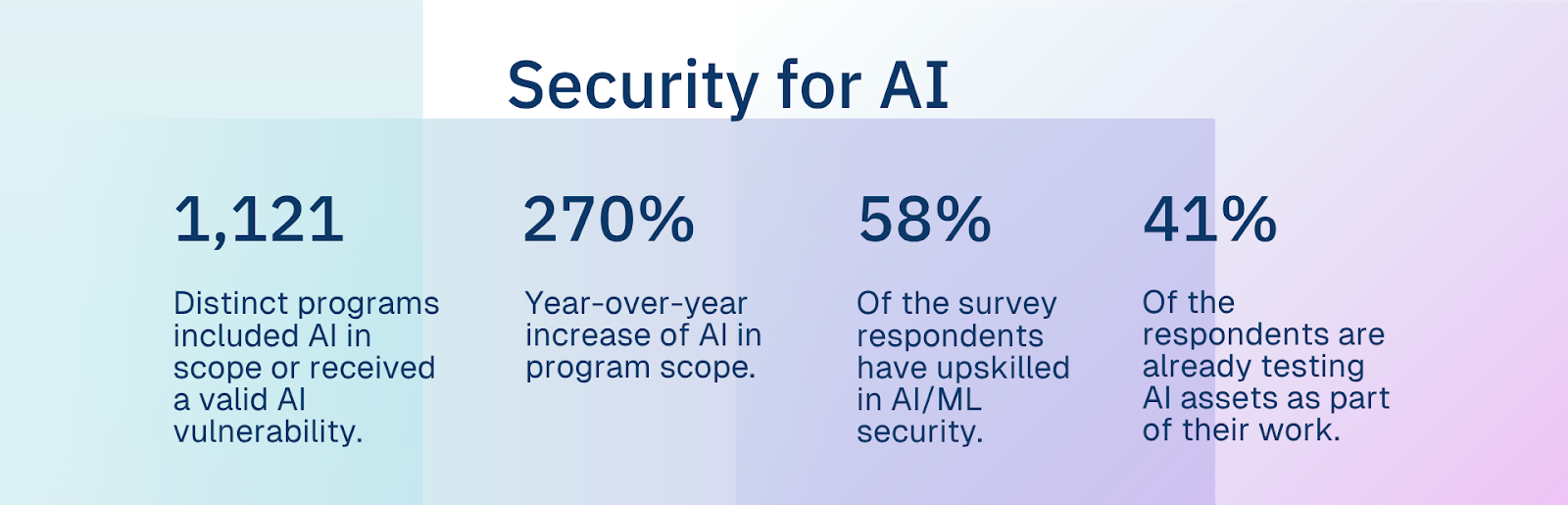

According to the latest Hacker-Powered Security Report*, valid AI-related vulnerability reports have surged 210% year over year, while the number of security researchers focused on AI and machine learning assets has doubled.

For CISOs and security leaders, this signals progress, but it also exposes a growing cybersecurity remediation challenge. Teams are finding more vulnerabilities than they can fix, creating a widening gap between detection and defense.

The Cybersecurity Remediation Bottleneck

As organizations race to embed AI across products, workflows, and customer experiences, their attack surface is expanding faster than ever. Every new model, API, and integration introduces potential exposure points that traditional cybersecurity workflows can’t manage.

The result is a growing backlog of unresolved vulnerabilities. Each unresolved report represents a potential exploit path, such as an open endpoint, a model manipulation risk, or a misconfigured data flow linking AI systems to sensitive assets.

Security teams are overwhelmed by the cybersecurity remediation workload. Thirty-eight percent of organizations lack the in-house resources to manage AI risk effectively, and another third aren’t sure they have the right skills at all.

This backlog is a capacity crisis.

AI-related vulnerabilities demand expertise in model behavior, data pipelines, and emerging exploit techniques. Yet many teams still rely on manual triage and ticketing to manage thousands of findings. That human-dependent process can’t scale as threats like prompt injection and data leakage surge.

The consequence is predictable: unresolved reports pile up, remediation timelines stretch longer, and organizations fall behind their attackers.
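The compounding effect is easy to see in a toy model. The sketch below is only an illustration: the function name `backlog_over_time` and the weekly numbers are invented for the example and do not come from the report.

```python
def backlog_over_time(incoming_per_week, fixed_per_week, weeks, start=0):
    """Return the open-finding count at the end of each week.

    All inputs are hypothetical; the model assumes constant weekly rates.
    """
    backlog = start
    history = []
    for _ in range(weeks):
        # New findings arrive, the team closes what it can, and the
        # backlog never drops below zero.
        backlog = max(0, backlog + incoming_per_week - fixed_per_week)
        history.append(backlog)
    return history


# A team that receives 50 valid findings a week but can fix only 40
# adds 10 open findings every week.
print(backlog_over_time(incoming_per_week=50, fixed_per_week=40, weeks=4))
# prints [10, 20, 30, 40]
```

Even a small, steady shortfall in capacity grows the queue linearly; if the incoming rate itself rises, the gap widens faster.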

When the Queue Becomes the Risk

Every delay in cybersecurity remediation increases exposure. IBM’s Cost of a Data Breach Report 2025 found that 97% of organizations reporting an AI-related security incident lacked proper AI access controls, a sign of how often basic weaknesses go unaddressed before an incident occurs.

Attackers are leveraging AI at scale by automating reconnaissance, chaining exploits, and identifying weaknesses faster than human teams can review reports. More than three-quarters (78%) of surveyed customers said their concern about AI security has grown in the past year, up from 48% just a year earlier.


Augmenting Security With AI

There’s no realistic way to scale manual cybersecurity remediation to match the velocity of AI-driven vulnerabilities. The solution isn’t just more people, but smarter processes powered by automation. The organizations moving AI implementations into testing scope illustrate this shift.

How to Operationalize Security for AI

- Unify the threat model. Cover exploitation paths and unsafe behaviors in a single view.

- Pressure-test before and after launch. Run objective-based adversarial exercises; add audit-friendly AI/LLM pentests; sustain coverage with an ongoing bug bounty or vulnerability disclosure program (VDP) as models, prompts, and integrations evolve.

- Instrument governance. Map findings to frameworks (e.g., NIST AI RMF, MITRE ATLAS, EU AI Act), require auditability across prompts, tools, and data flows, and keep humans in the loop for sensitive actions.

- Resource to reality. If your team is stretched (most are), use independent testing to provide board- and regulator-ready assurance while you build internal capacity.
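As a rough illustration of the "instrument governance" step above, the sketch below tags each finding with framework references and holds sensitive actions for human approval. The category names, the mapping table, and the `SENSITIVE_ACTIONS` set are all hypothetical. The only framework detail taken as given is that NIST AI RMF is organized around the Govern, Map, Measure, and Manage functions; a real program would maintain and review its own mappings.

```python
from dataclasses import dataclass, field

# Hypothetical mapping from finding category to framework references.
# Only the NIST AI RMF function names are real; the pairings are illustrative.
FRAMEWORK_MAP = {
    "prompt_injection": ["NIST AI RMF: MEASURE", "NIST AI RMF: MANAGE"],
    "data_leakage": ["NIST AI RMF: MAP", "NIST AI RMF: MANAGE"],
}

# Hypothetical set of remediation actions that always need a human decision.
SENSITIVE_ACTIONS = {"rotate_credentials", "disable_model_endpoint"}


@dataclass
class Finding:
    finding_id: str
    category: str
    proposed_action: str
    frameworks: list = field(default_factory=list)
    needs_human_approval: bool = False


def instrument(finding):
    """Attach framework tags and flag sensitive actions for human review."""
    finding.frameworks = FRAMEWORK_MAP.get(finding.category, ["UNMAPPED"])
    finding.needs_human_approval = finding.proposed_action in SENSITIVE_ACTIONS
    return finding


finding = instrument(Finding("F-1", "prompt_injection", "disable_model_endpoint"))
print(finding.frameworks, finding.needs_human_approval)
```

Findings that come back as `UNMAPPED` are a useful signal in their own right: they show where the threat model or the mapping table has not caught up with what testers are reporting.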


A Smarter Way to Stay Ahead

The most resilient organizations are embracing adaptive cybersecurity remediation, where AI enhances, not replaces, human decision-making.

The volume of AI-related findings will only grow, but that doesn’t have to mean falling behind. By investing in AI-assisted triage and continuous testing, security leaders can transform remediation from a reactive scramble into a proactive advantage.

In 2025, 90% of HackerOne customers reported using Hai, an agentic AI system that automates triage, filters duplicate reports, and highlights the most urgent risks for human review.
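The general pattern of filtering duplicates and surfacing the most urgent reports for a human can be sketched in a few lines. This is not how Hai works internally, which the report does not describe; the `Report` fields, the exact-match fingerprint, and the 0 to 10 severity scale are assumptions made for the example.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class Report:
    report_id: str
    asset: str
    weakness: str
    severity: float  # assumed 0-10 score, higher is more urgent


def fingerprint(report):
    """Hash the normalized asset and weakness; an assumed, simplistic notion of 'duplicate'."""
    key = f"{report.asset.strip().lower()}|{report.weakness.strip().lower()}"
    return hashlib.sha256(key.encode("utf-8")).hexdigest()


def triage(reports, top_n=3):
    """Return (most urgent unique reports, ids of reports filtered as duplicates)."""
    unique = {}
    duplicates = []
    for report in reports:
        fp = fingerprint(report)
        if fp in unique:
            duplicates.append(report.report_id)  # keep the first report seen
        else:
            unique[fp] = report
    ranked = sorted(unique.values(), key=lambda r: r.severity, reverse=True)
    return ranked[:top_n], duplicates


reports = [
    Report("R-1", "chat-api", "Prompt Injection", 8.1),
    Report("R-2", "Chat-API ", "prompt injection", 7.9),  # same asset and weakness as R-1
    Report("R-3", "billing-api", "Data Leakage", 9.3),
]
urgent, dupes = triage(reports, top_n=2)
print([r.report_id for r in urgent], dupes)
# prints ['R-3', 'R-1'] ['R-2']
```

The ranked list is a queue for human review, not an automated verdict, which keeps the final decision with the analyst.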


*Survey methodology: HackerOne and UserEvidence surveyed 99 HackerOne customer representatives between June and August 2025. Respondents represented organizations across industries and maturity levels, including 6% from Fortune 500 companies, 43% from large enterprises, and 31% in executive or senior management roles. In parallel, HackerOne conducted a researcher survey of 1,825 active HackerOne researchers, fielded between July and August 2025. Findings were supplemented with HackerOne platform data from July 1, 2024 to June 30, 2025, covering all active customer programs. Payload analysis: HackerOne also analyzed over 45,000 payload signatures from 23,579 redacted vulnerability reports submitted during the same period.