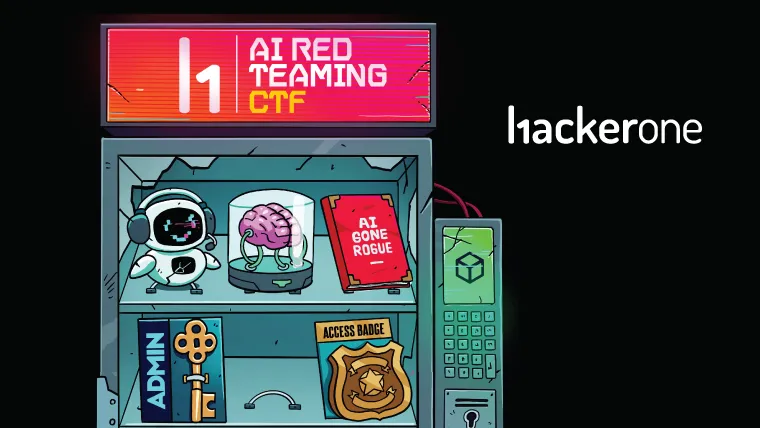

LLMs adversarial testing with HackerOne: Join the CTF!

This blog was co-developed by HackerOne and Hack The Box as part of our AI Red Teaming CTF collaboration.

Large language models (LLMs) and other generative AI systems are changing how we build products, defend networks, and even how attackers operate.

Back at Black Hat, we dropped a live AI red teaming simulation directly at our booth with Hack the Box: three escalating prompt injection challenges, one model locked down with safety guardrails, and a brutal time limit.

Hundreds lined up, some cracked it, most didn’t. But everyone walked away with the same takeaway: LLMs aren’t as predictable as they seem.

Now we’re scaling that energy into something bigger: introducing AI Red Teaming CTF: [ai_gon3_rogu3]; a 10-day adversarial challenge starting in September; designed to be creative, hands-on, and just a little unhinged.

What is AI red teaming (AIRT)?

Traditionally, red teaming means simulating real-world attackers to find security gaps. When it comes to AI red teaming, it becomes all about targeting models, apps, and systems using language, context, and creative misuse.

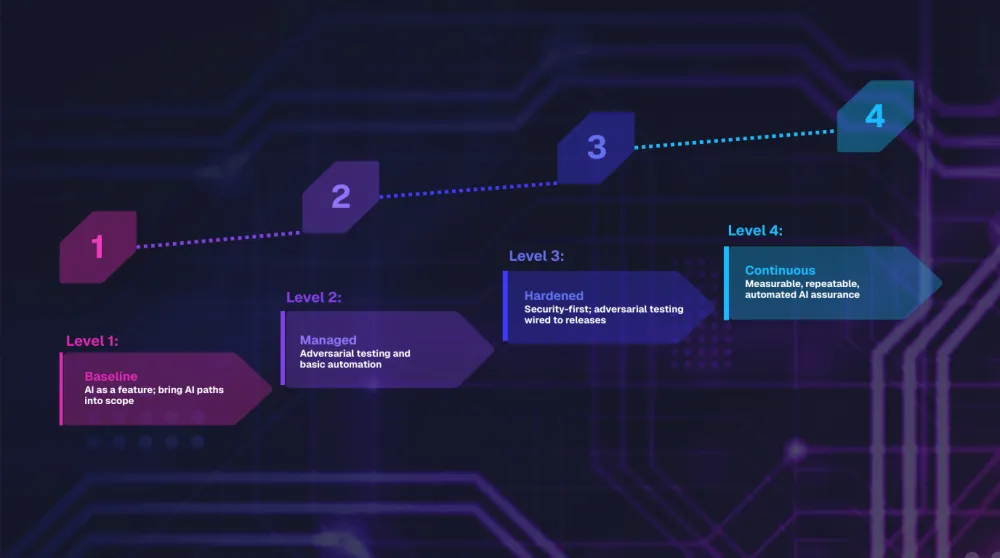

Why do this? We can’t evaluate AI safety by inspection alone. Currently, there are no universally accepted best practices for AI red teaming, and the practice is still “a work in progress.”

LLM red‑teaming doesn’t guarantee safety. It only provides a snapshot of how a system behaves under specific adversarial conditions. To improve model resilience, organizations need to test models under realistic attack scenarios and iterate on their defenses.

The field took off after the first public GenAI Red Teaming event at DEF CON 31, organized by the AI Village, but despite the ongoing buzz, most teams still don’t know where to start.

That’s what this CTF is for.

Built by HackerOne and Hack The Box, this CTF reflects the kinds of misuse paths that are tested for in the real world in most AIRT engagements.

What to expect (without spoiling the fun)

The CTF drops you into scenario-based challenges that feel uncomfortably real. You might find yourself:

- Convincing a model to escalate a fake crisis

- Extracting insider content through indirect prompt manipulation

- Bypassing multi-user access controls via language alone

- Tricking safety filters into letting something slip

The deeper you go, the more the lines blur between prompt, guidelines, and system behavior. And that’s exactly the point. You’ll see just how much of an AI system’s integrity relies on language, and how easy it is to turn that language against it.

Who is this CTF for?

The AI red teaming community is still figuring out best practices, and challenges like [ai_gon3_rogu3] help push the field forward. Whether you’re a veteran researcher or just curious about adversarial AI, bring your creativity and get ready to jailbreak some models.

Getting ready for the event

If this is your first deep dive into AI or ML adversarial testing, you don’t have to go in blind.

You can check our comprehensive guide, “Securing the Future of AI,” to learn about AI security and safety risks, emerging attacker tactics, and real-world case studies. If you are ready to put your skills to practice, HTB Academy recently launched an AI Red Teamer job‑role path in collaboration with Google’s red teamers. The course covers prompt injection techniques, jailbreak strategies, bias exploitation, and other attack vectors—skills closely aligned with what you’ll face in the CTF.

How to join the CTF

- 🛠️ Create your free Hack The Box account

- 🔗 Register for the CTF

- 📅 Save the dates September 9–18!

- 🌍 It’s fully remote, so open to all

- 👕 Limited edition swag for top players

We can’t wait to see what you break and what you learn!