Shopify's Playbook for accelerating secure AI adoption

Why this playbook exists

AI is now embedded across modern engineering and security workflows. But adding AI increases the volume, complexity, and pace of change across your attack surface. Shopify’s security team—handling hundreds of incoming vulnerability reports per week with a lean team—built a scalable model for integrating AI responsibly across their operations without sacrificing rigor and innovation, resulting in 62% faster validation, triage, and communication.

This playbook distills their approach into practical steps you can adapt for deploying AI on an enterprise scale. (Based on “AI Security at Scale” webinar)

Getting Started

What You’ll Learn

- Assessment of how AI changes your attack surface and risk model

- How to embed security controls directly into AI workflows

- Move from one-time AI reviews to continuous AI risk evaluation

What You’ll Need

- Ownership of AI security across engineering and security teams

- AI tooling that supports auditability, access controls, and human approval

- Bug Bounty program

- AI Red Teaming

Overview

Defining the Challenge

AI adoption created a fundamental tension at Shopify, which Jill Moné-Corallo describes clearly: “How are we securing things as fast as AI is allowing us to speed up?”

Solution

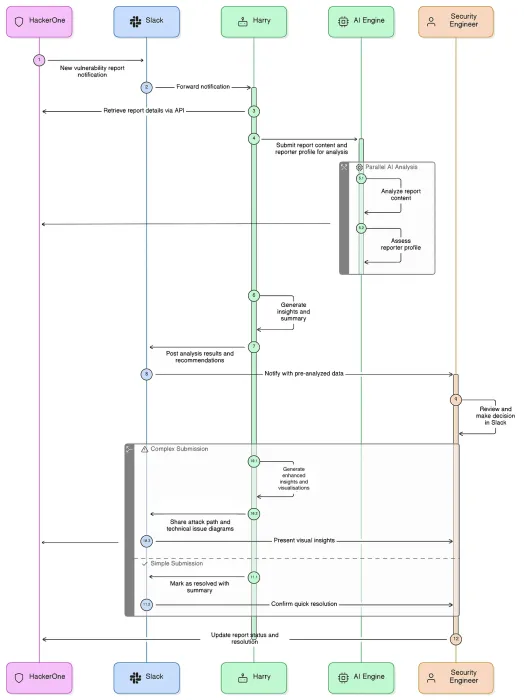

Shopify created their own teammate that remembers every report using Hai and their internal AI agent in tandem.

Expected Results

- Faster and more consistent validation and triage

- Reduced analyst workload and burnout

- Improve onboarding speed for new team members

- Exposure reduction as AI usage scales

How to Accelerate Secure AI Adoption

1. Map how AI changes your attack surface

- Identify workflows using LLM outputs, automated decision agents, prompt-based logic, or autonomous actions. Consider risks specific to AI: prompt injection, hallucination-induced logic flaws, data leakage, and model misuse.

- Track where sensitive data is used in prompts or model fine-tuning.

2. Establish AI governance baselines using frameworks like OWASP Top 10 for LLM Applications and NIST AI RMF. Create internal categories for what data is allowed in prompts, what is prohibited, and where human review must be mandatory.

3. Treat all AI-generated artifacts as untrusted until validated. Build internal security policies for validating AI-generated triage summaries, remediation guidance, or code changes.

1. Keep AI inference inside trusted boundaries

- Run models in isolated, auditable infrastructure.

- Apply encryption-in-use, encryption-in-transit, and encryption-at-rest.

- Validate third-party model providers adhere to your data residency and training rules.

2. Apply strict authentication + authorization for any AI agent that can take actions (e.g., create tickets, query systems, modify configurations).

3. Log all AI-invoked actions at the same granularity as human actions.

4. Train teams on safe prompts.

- Prohibit sensitive data in prompts unless the environment is approved for that sensitivity level.

- Create internal documentation with examples of “safe” vs. “unsafe” prompting.

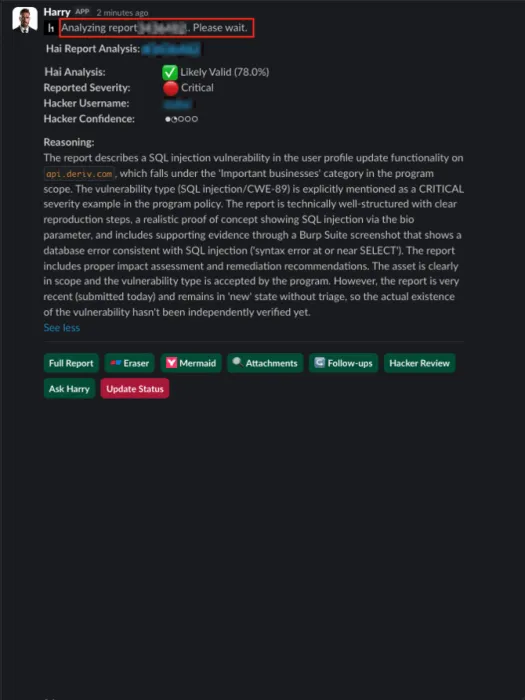

Shopify applied AI to dramatically reduce noise and increase analyst throughput without removing humans from decisions.

1. Deploy AI for first-pass triage, deduplication, and report summarization.

Shopify uses AI to distill verbose reports and highlight the single line that matters: “Our tools were able to distill down: ‘Here’s this one line we should tease out' instead of us spending those man hours.”

Recommended workflows:

- Use Hai’s dedupe agent to cluster similar reports and suppress internal duplicates.

- Auto-identify “insufficient information” cases and generate follow-up queries to researchers.

- Highlight potential exploit paths using model-based reasoning.

2. Use AI to cross-check human assessments

Shopify pairs their internal model with Hai: “We use it to double-check ourselves… to make sure we’ve got a consistent tone or clearer steps.”

Tip: Use two AI models—one institutional, one general-purpose—to compare reasoning outputs and surface inconsistencies.

3. Apply AI to repetitive scoring and impact analysis

Shopify uses Hai as an objective, third-party view to analyze historical scoring decisions and surface inconsistencies in their CVSS-influenced calculator. This is especially useful for teams recalibrating severity models or payout tables.

AI systems introduce new threat classes that traditional testing misses

Shopify’s micro–live hacking event in September 2025 focused entirely on testing Sidekick (their merchant-facing AI assistant) and related AI features.

1. Put AI assets (LLM endpoints, agents, RAG pipelines) in scope for bug bounty and pentesting.

- Define custom payout tables targeting AI-specific vulnerabilities.

2. Recruit specialized AI researchers

Shopify sourced experts from DEF CON’s Bug Bounty Village in addition to their top program performers.

Ways to source AI hackers:

- Tap ML research labs and academia (grad students and postdocs).

- Host AI red-team hackathons, CTFs, or competitions: Run public or invite-only competitions. Quick tip: Provide sandboxes, sample datasets, and API credits to lower friction.

- Run specialized private bounty programs with AI scopes. Quick tip: Add special “AI expert” tiers and invite past top performers from ML competitions.

- Partner with HackerOne AI Red Teaming (or industry AI/red-team consultancies).

- Recruit from ML platforms, OSS model communities, and GitHub.

- Source multilingual and multimodal testers. Quick tip: Run language-tagged mini-programs with tailored rewards.

- Upskill existing security researchers into AI attack roles. Quick tip: Run a paid apprenticeship that culminates in entry to your private AI program.

- Work with model vendors and platform partners. Quick tip: Negotiate joint responsible-disclosure processes and co-branded exercises.

3. Use Live Hacking Events or AI Red Teaming to shape AI security policies

Shopify is using findings from their micro-LHE to write a formal internal and external AI security policy and paytable. But Live Hacking Events are not the only way to go– anyone with AI scope can leverage AI Red Teaming to shape policy too.

AI is not a one-size-fits-all accelerator:

“There is no silver bullet here. Each team must find harmony between automation and manual oversight.” —Nidhi Agarwal, HackerOne Chief Product Officer

1. Customize AI outputs to your team's voice and processes. Shopify’s internal model reflects institutional memory on tone, formatting, scoring practices. Hai provides complementary objectivity and consistency.

2. Build ongoing feedback loops to compare AI output vs human decisions. Use this delta to update prompts, instructions, or guardrails.

3. Integrate AI into your CI/CD, ticketing, and remediation workflows via APIs. HackerOne’s APIs allow:

- Connect to Hai via the API

- Auto-creation of validated tickets in Jira/GitHub

- Triggering scans or retests based on model outputs

- Streaming validated findings to SIEM/SOAR

AI risks change weekly so one-time reviews aren’t enough.

1. Continuously reassess AI behavior as prompts, integrations, or training data change

- Automate regression tests for safety or security behavior.

- Use Bug Bounty or AI Red Teaming for ongoing coverage.

2. Track exposure reduction. Shopify’s gains in speed, onboarding efficiency, and response accuracy translate directly into Return on Mitigation (RoM) outcomes: fewer exploitable vulnerabilities, faster closure, and reduced operational risk.

3. Plan for scale before adoption spikes. Build onboarding docs, prompting guides, and access controls ahead of time and enable AI usage policies that accommodate expansion.