Cut the Noise, Keep the Signal: The Hidden Cost of Duplicate Reports

In the fast-moving world of security testing, noise has become one of the biggest hidden costs. Every week, security teams receive a flood of vulnerability reports, many of them duplicates of issues already logged or fixed.

Sorting through those reports is both tedious and expensive. Each duplicate adds review time, stretches analyst capacity, and delays the validation of new findings that could actually reduce risk. For researchers, duplicate submissions are just as frustrating—they can mean slower responses or missed recognition for valuable work.

At its core, the challenge is visibility. When analysts spend hours re-reviewing the same findings, truly critical vulnerabilities risk getting buried. The result: more bottlenecks, slower remediation, and less trust between researchers and security teams.

The Limits of Manual Deduplication

Duplicate reports look simple from the outside. Two researchers find the same vulnerability, so you match one to the other and close it out. But anyone who has worked with security findings knows how far that is from reality.

Security researchers describe issues in widely different ways. Some write a single sentence. Others provide long, detailed explanations. Some attach screenshots or videos. Others submit multi-step proofs of concept. Even when two reports describe the same underlying vulnerability, the language, structure, and evidence rarely line up. That makes accuracy a persistent challenge because there is no predictable format to rely on.

Speed is just as important. When a researcher submits a report, they want to know immediately whether it is a new finding. Any delay creates friction. For security teams, the impact is similar. Duplicate reports slow down queues, add repetitive work, and reduce overall signal.

Accuracy alone is not enough. Speed alone is not enough. Real value comes from delivering both. This combination is what makes deduplication difficult and also why it matters so much. When you achieve the right balance, the workflow feels almost effortless. When you miss, duplicate handling becomes one of the most frustrating points in intake.

Deduplication With Global Pattern Context

Where traditional validation depends solely on manual review, HackerOne combines human expertise with agentic AI efficiency.

This partnership accelerates validation without losing the nuance of expert judgment. The result is faster recognition of legitimate reports, quicker closure on known issues, and less fatigue for security teams and researchers alike.

The Hai Agentic AI system builds on the world’s largest dataset of validated vulnerabilities. It cross-checks incoming reports against known global patterns, using CWE identifiers, CAPEC categories, payload similarities, and metadata correlations, to identify potential duplicates before they ever hit an analyst’s queue.

The Deduplication Agent:

- Cross-checks against global patterns: CWE, CAPEC, payloads, and metadata.

- Compares report content to highlight meaningful differences and similarities.

- Learns continuously from outcomes, improving precision with every submission.

- Delivers deduplication coverage across more than 80% of vulnerability types, with human oversight ensuring fairness and accuracy.
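To make the cross-checking idea concrete, here is a minimal sketch of how signals such as CWE, CAPEC, payload similarity, and metadata could be combined into a single duplicate score. It is an illustration only, not the Deduplication Agent's actual method: the `Report` fields, the weights, the 0.7 threshold, and the use of Python's `difflib` for payload comparison are all assumptions chosen for the example.

```python
from dataclasses import dataclass
from difflib import SequenceMatcher


@dataclass(frozen=True)
class Report:
    """Hypothetical, simplified report record (not a real platform schema)."""
    report_id: str
    cwe: str       # weakness class, e.g. "CWE-79"
    capec: str     # attack pattern, e.g. "CAPEC-63"
    asset: str     # metadata: affected host or endpoint
    payload: str   # proof-of-concept payload or request snippet


# Illustrative weights only; a real system would tune or learn these.
WEIGHTS = {"cwe": 0.3, "capec": 0.1, "asset": 0.2, "payload": 0.4}


def similarity(a: Report, b: Report) -> float:
    """Return a score in [0, 1]; higher means more likely a duplicate."""
    payload_ratio = SequenceMatcher(None, a.payload.lower(), b.payload.lower()).ratio()
    return (
        WEIGHTS["cwe"] * (a.cwe == b.cwe)
        + WEIGHTS["capec"] * (a.capec == b.capec)
        + WEIGHTS["asset"] * (a.asset == b.asset)
        + WEIGHTS["payload"] * payload_ratio
    )


def rank_candidates(incoming: Report, known: list[Report], threshold: float = 0.7):
    """List (score, report_id) for known reports at or above threshold, best first."""
    scored = [(similarity(incoming, k), k.report_id) for k in known]
    return sorted((s for s in scored if s[0] >= threshold), reverse=True)


known = [
    Report("R1", "CWE-79", "CAPEC-63", "app.example.com/search", "<script>alert(1)</script>"),
    Report("R2", "CWE-89", "CAPEC-66", "api.example.com/login", "' OR 1=1 --"),
]
incoming = Report("R3", "CWE-79", "CAPEC-63", "app.example.com/search",
                  "<script>alert(document.domain)</script>")
ranked = rank_candidates(incoming, known)
```

In this toy example only `R1` clears the threshold, because it shares the weakness class, attack pattern, and endpoint with the incoming report and differs only slightly in payload. The candidates are suggestions for a reviewer, not automatic closures.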

More Focus on Net-New Risk

We built the Deduplication Agent to help teams spend less energy re-litigating known issues and more energy validating the reports that change exposure.

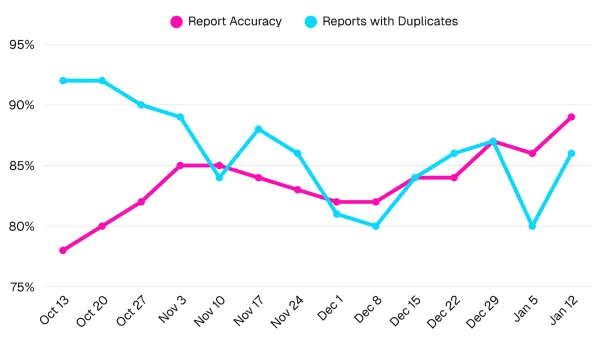

In a high-volume analysis, the agent reduced median deduplication time from 94 seconds to 62 seconds, a saving of 32 seconds per report, or roughly one third. That improvement speeds queue movement and helps teams reach high-priority findings sooner.
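As a quick back-of-the-envelope check, the snippet below uses only the two medians quoted above (94 and 62 seconds) to work out the per-report saving and what it could add up to across a queue. The queue size of 1,000 reports is a hypothetical figure picked for illustration, and multiplying a median by a report count is a rough estimate rather than a measured total.

```python
MEDIAN_BEFORE_S = 94  # median deduplication time before, in seconds
MEDIAN_AFTER_S = 62   # median deduplication time with the agent, in seconds

saved_per_report_s = MEDIAN_BEFORE_S - MEDIAN_AFTER_S
percent_reduction = saved_per_report_s / MEDIAN_BEFORE_S * 100


def hours_saved(report_count: int) -> float:
    """Rough analyst hours saved, assuming every report saves the median amount."""
    return report_count * saved_per_report_s / 3600


print(f"Saved per report: {saved_per_report_s} s ({percent_reduction:.0f}% reduction)")
print(f"Hypothetical queue of 1,000 reports: {hours_saved(1000):.1f} hours")
```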

Accuracy matters as much as speed. The agent delivered at least one duplicate recommendation on 92% of processed reports, and teams saw 80–95% positive recommendation accuracy with human review in the loop.

The time spent identifying duplicates shrinks as accuracy and coverage improve. A human reviewer still makes the final call, supported by a confidence score and a short explanation that keep decisions fast and transparent.
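The division of labor described here can be sketched in a few lines: the agent proposes, the reviewer decides. The `Recommendation` structure and the `final_status` function are hypothetical names invented for this example; they show the shape of a human-in-the-loop decision, not any actual product interface.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Recommendation:
    """Hypothetical agent output shown to the reviewer."""
    report_id: str
    duplicate_of: Optional[str]  # suggested original report, or None
    confidence: float            # 0.0 to 1.0
    explanation: str             # short rationale for the suggestion


def final_status(rec: Recommendation, analyst_confirms: bool) -> str:
    """The reviewer's decision, not the confidence score, sets the outcome."""
    if rec.duplicate_of is not None and analyst_confirms:
        return f"duplicate of {rec.duplicate_of}"
    return "new finding"


rec = Recommendation(
    report_id="R3",
    duplicate_of="R1",
    confidence=0.9,
    explanation="Same weakness class and endpoint; payload differs only slightly.",
)
status = final_status(rec, analyst_confirms=True)
```

Note that a high confidence score never closes a report on its own in this sketch. If the reviewer rejects the suggestion, the report proceeds as a new finding.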

Agentic deduplication turns what used to be an administrative drag into a competitive advantage:

- Efficiency: Analysts reclaim hours once lost to manual duplicate sorting.

- Consistency: Automated coverage means fewer findings slip through the cracks.

- Fairness: Researchers get credit where it’s due, without unnecessary delay.

- Clarity: Less noise in the queue allows focus on real, exploitable risk.

Deduplication is about restoring confidence in the validation process itself. When teams can trust that every finding is unique, verified, and prioritized accurately, they spend less energy second-guessing results and more time improving defenses.

Deduplication That Holds Up Under Volume

Duplicates are unavoidable. Wasting time on them isn’t.

By pairing AI’s precision with human expertise, deduplication becomes faster, fairer, and more consistent. With agentic AI covering more than 80% of vulnerability types under expert oversight, security leaders can trust that only the right signals rise above the noise, helping teams move faster, act smarter, and stay focused on what matters most.