Cyber Reasoning System: The Origin of AI Security and Agentic Defense

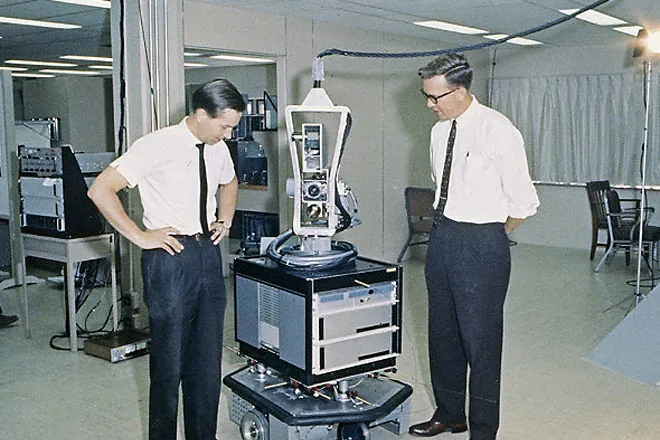

In 1966, long before Agentic AI and LLMs, a small DARPA-funded team at the Stanford Research Institute introduced Shakey the Robot: a wobbly, camera-mounted machine capable of perceiving its surroundings, reasoning about its observations, and making its own decisions. Shakey wasn’t fast or graceful, but it was groundbreaking for both robotics and artificial intelligence research. It was the first robot able to reason about its inputs and act autonomously.

By the 1980s, the idea that machines could one day reason and act intelligently and autonomously had taken hold. Researchers began to explore how computers might reason about software, not just execute it. Projects in symbolic execution and automated reasoning pushed computers to analyze logic, detect inconsistencies, and verify program behaviour. These were early glimpses of software understanding itself.

For years, the concept bordered on science fiction. The notion that machines could autonomously reason and self-heal seemed implausible. How could a deterministic system ever adapt to something as dynamic and unpredictable as the internet?

Over the following decades, industry researchers began to explore the idea of self-managing and self-repairing systems. The late 1990s and early 2000s saw initiatives like IBM’s Autonomic Computing, which proposed software that could configure, heal, optimize, and protect itself. Academic projects such as Process Homeostasis experimented with autonomous fault detection and response, inspired by human biological immune responses.

Later, projects such as Repairnator demonstrated that machines could evolve and test code repairs entirely on their own. These experiments blurred the line between vulnerability detection and active defense, hinting at a future where software could repair itself in real time.

The Birth of the Cyber Reasoning System

The building blocks were there: symbolic reasoning, fuzzing, and automated patching. Yet they remained isolated, each solving part of the problem. The concept of the Cyber Reasoning System (CRS) emerged from the effort to unify them into a single architecture in which machines could find, exploit, and patch vulnerabilities without human input.

A CRS was envisioned as a fully autonomous system capable of performing the entire vulnerability lifecycle:

- Discovering flaws through automated analysis and fuzzing

- Validating them by crafting proof-of-concept exploits

- Repairing them with self-generated patches

- Deploying patches while maintaining functionality

The CRS concept combined the analytical reasoning of symbolic execution with the adaptability of automated patching and the speed of fuzzing. The result was a feedback loop that could continuously analyse, learn, and heal. A closed system that could both attack and defend in real time.
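The lifecycle above can be sketched as a toy loop. This is a minimal illustration under stated assumptions, not how any real CRS was built: the target function, the random fuzzer, the generic exception-guard "patch", and the regression cases are all hypothetical stand-ins, and symbolic execution is left out entirely.

```python
import random

def vulnerable_parse(data: bytes) -> int:
    """Hypothetical target: first byte is a length used to index the payload."""
    if not data:
        return 0
    length, payload = data[0], data[1:]
    # Bug: no bounds check, so length > len(payload) raises IndexError.
    return payload[length - 1] if length else 0

# Hypothetical regression cases that define "maintaining functionality".
REGRESSION = [(b"", 0), (b"\x00abc", 0), (b"\x02abc", ord("b"))]

def discover(target, rng, attempts=10_000):
    """Discover: throw random inputs at the target until one crashes it."""
    for _ in range(attempts):
        candidate = bytes(rng.randrange(256) for _ in range(rng.randrange(1, 8)))
        try:
            target(candidate)
        except Exception:
            return candidate
    return None

def validate(target, crash_input) -> bool:
    """Validate: confirm the crashing input reproduces (a stand-in for a PoC)."""
    try:
        target(crash_input)
    except Exception:
        return True
    return False

def make_guard_patch(target, default=0):
    """Repair: a generic guard that turns the crash into a safe default."""
    def patched(data: bytes) -> int:
        try:
            return target(data)
        except IndexError:
            return default
    return patched

def preserves_functionality(candidate) -> bool:
    """Deploy gate: the patch must not change behaviour on known-good inputs."""
    return all(candidate(inp) == out for inp, out in REGRESSION)

def crs_cycle(target, seed=0):
    """Run one discover -> validate -> repair -> deploy pass."""
    rng = random.Random(seed)
    crash = discover(target, rng)
    if crash is None or not validate(target, crash):
        return target, None          # nothing confirmed, nothing to patch
    candidate = make_guard_patch(target)
    if validate(candidate, crash) or not preserves_functionality(candidate):
        return target, crash         # patch rejected, keep the original
    return candidate, crash          # patch accepted and "deployed"

deployed, crash = crs_cycle(vulnerable_parse)
```

The point of the sketch is the gate at the end: a patch is only accepted if it stops the reproduced crash and still passes the regression cases, which mirrors the "deploying patches while maintaining functionality" step.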

When DARPA launched the Cyber Grand Challenge over 10 years ago in 2014, it became the first large-scale attempt to bring the Cyber Reasoning System (CRS) concept to life. Competing teams built autonomous systems that scanned binaries, detected vulnerabilities, generated exploits, and patched them within seconds. All without human guidance. For the first time, machines were reasoning about code, not just running it.

Although the challenge proved that autonomous cyber defence was achievable, these early systems were still deterministic. They operated in fixed pipelines and closed loops, limited to static binaries rather than the dynamic, context-rich environments of modern networks. CRS could think fast, but not flexibly.

The Rise of Agentic AI

Today, that limitation has begun to disappear. The rise of Agentic AI, large language models (LLMs), and the Model Context Protocol (MCP) has given machines the ability to reason more like humans: context-aware, goal-driven, and adaptive. The closed-loop intelligence of CRS has evolved into something open-ended and conversational.

As Agentic AI systems mature, they are extending the ideas first proven by Cyber Reasoning Systems far beyond vulnerability research. Machines are no longer reasoning solely about code. They are reasoning about intent, risk, and defense itself.

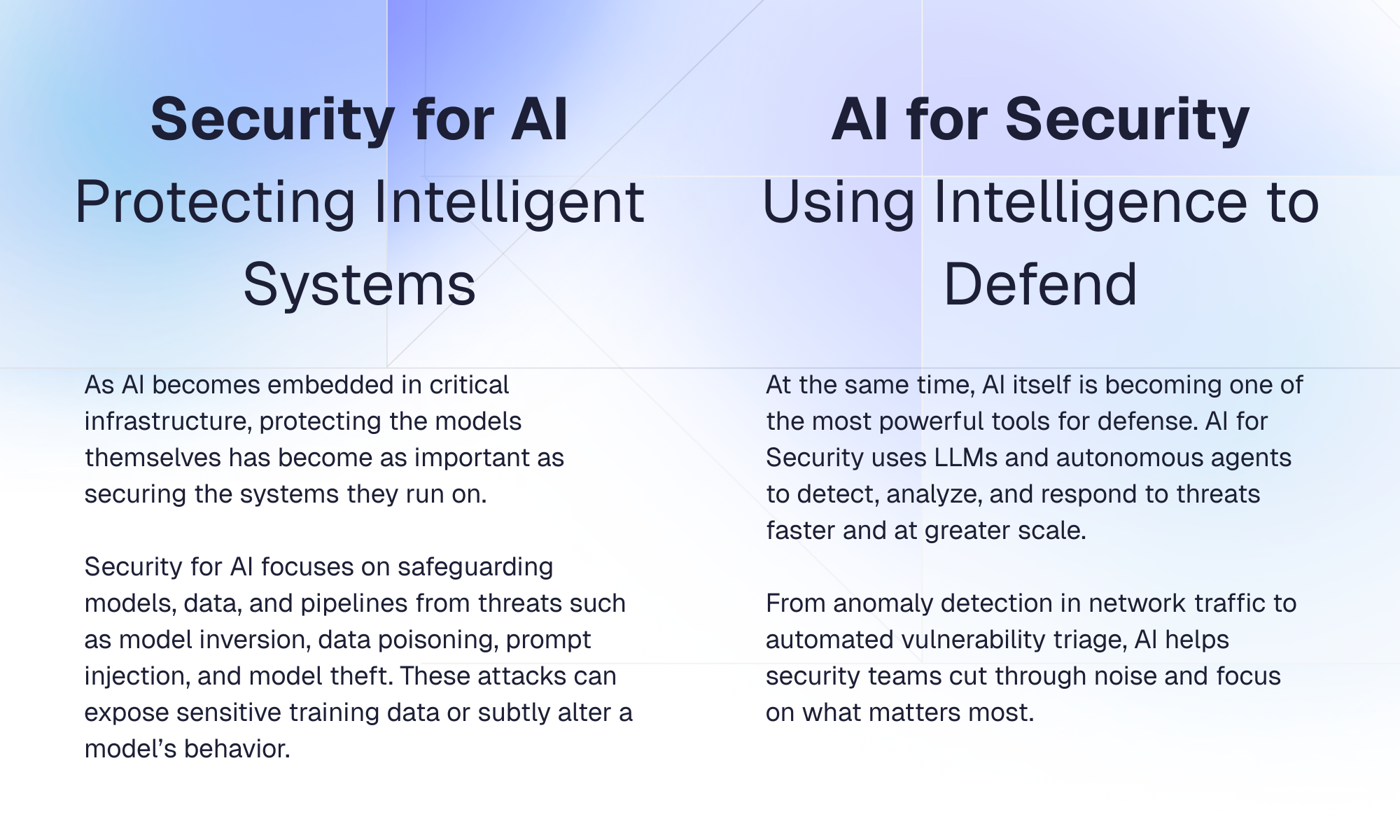

This shift has created a new dual focus for cybersecurity. On one side, we must now secure AI systems themselves. On the other, we are beginning to use AI as an active defender.

Together, they close the loop that began decades ago, as AI learns not only to defend the digital world but also to defend itself.

When combined with human expertise, AI becomes a force multiplier, automating the repetitive work so defenders can concentrate on strategic, high-impact decisions. In many ways, AI for Security completes the loop that began with DARPA’s Cyber Reasoning Systems: machines reasoning not only about code, but about threats, risks, and defense strategy.

The Next Era of Cyber Reasoning

The next chapter points toward self-healing infrastructure: digital ecosystems capable of detecting, reasoning about, and repairing their own faults without waiting for human intervention. As Agentic AI systems become more interconnected, the traditional boundaries between organizations, networks, and data begin to blur. These agents will communicate and collaborate across environments, making the concept of a single “perimeter” increasingly obsolete.

In this world, even the idea of identity as the new perimeter may fade. Security and Agentic AI will need to be dynamic, continuous, and context-driven, with zero trust as their backbone. Every user, device, and process will be verified at each step because everything will be interconnected.
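As a rough illustration of "verified at each step", the sketch below checks identity, device posture, and permission on every single request instead of trusting anything that is already inside a network. All names here (`SECRET`, `POLICY`, `Request`, `authorize`) are hypothetical, and the HMAC token is a simplified stand-in for a real credential system.

```python
import hashlib
import hmac
from dataclasses import dataclass

SECRET = b"demo-only-secret"  # hypothetical signing key, for illustration only

# Hypothetical least-privilege policy: which subject may perform which action.
POLICY = {
    "agent-7": {"read_logs"},
    "alice": {"read_logs", "deploy_patch"},
}

def sign(subject: str) -> str:
    """Issue a toy credential bound to the subject's name."""
    return hmac.new(SECRET, subject.encode(), hashlib.sha256).hexdigest()

@dataclass(frozen=True)
class Request:
    subject: str            # user, device, or process making the call
    token: str              # credential presented with this request
    device_compliant: bool  # posture signal reported for this request
    action: str             # what the caller wants to do

def authorize(req: Request) -> bool:
    """Evaluate every request from scratch; no step inherits earlier trust."""
    if not hmac.compare_digest(req.token, sign(req.subject)):
        return False        # identity not proven
    if not req.device_compliant:
        return False        # context check failed
    return req.action in POLICY.get(req.subject, set())

ok = authorize(Request("alice", sign("alice"), True, "deploy_patch"))
denied = authorize(Request("agent-7", sign("agent-7"), True, "deploy_patch"))
```

Here `ok` is allowed because all three checks pass, while `denied` fails only the permission check: the agent is authenticated and compliant but was never granted `deploy_patch`.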

The same autonomous reasoning that once powered DARPA’s Cyber Reasoning Systems is now evolving into a distributed web of Agentic AI capable of maintaining, defending, and ultimately healing the digital fabric it inhabits.

"Right now, we are in a pivotal moment for cybersecurity. We are on the brink of creating true Cyber Reasoning Systems, made possible by recent AI developments. The question on everybody's lips is: will AI replace us, or augment us?"

—Luke Stephens, Director at HackerContent

The 9th Hacker-Powered Security Report revealed that only 12% of researchers think AI will replace human hackers. Most see the future in collaboration: a balance between human intuition and machine precision. It’s a reminder that what began with DARPA’s early autonomous systems has matured into a true partnership between human creativity and machine reasoning.

Now that we have the necessary pieces, it’s a race to put them together. Innovative startups are already competing to build the first truly autonomous cybersecurity reasoning systems: machines that can detect, decide, and defend in real time.

Nearly 60 years after Shakey the Robot first learned to reason about its world, that same spark has evolved into intelligent systems defending the digital realm.

What was once an academic dream is now an operational reality.
