AI Security Testing Is the Maturity Milestone for Modern Organizations

AI moved quickly from boardroom talking points to systems that impact real customers, real data, and real business outcomes. And real vulnerabilities.

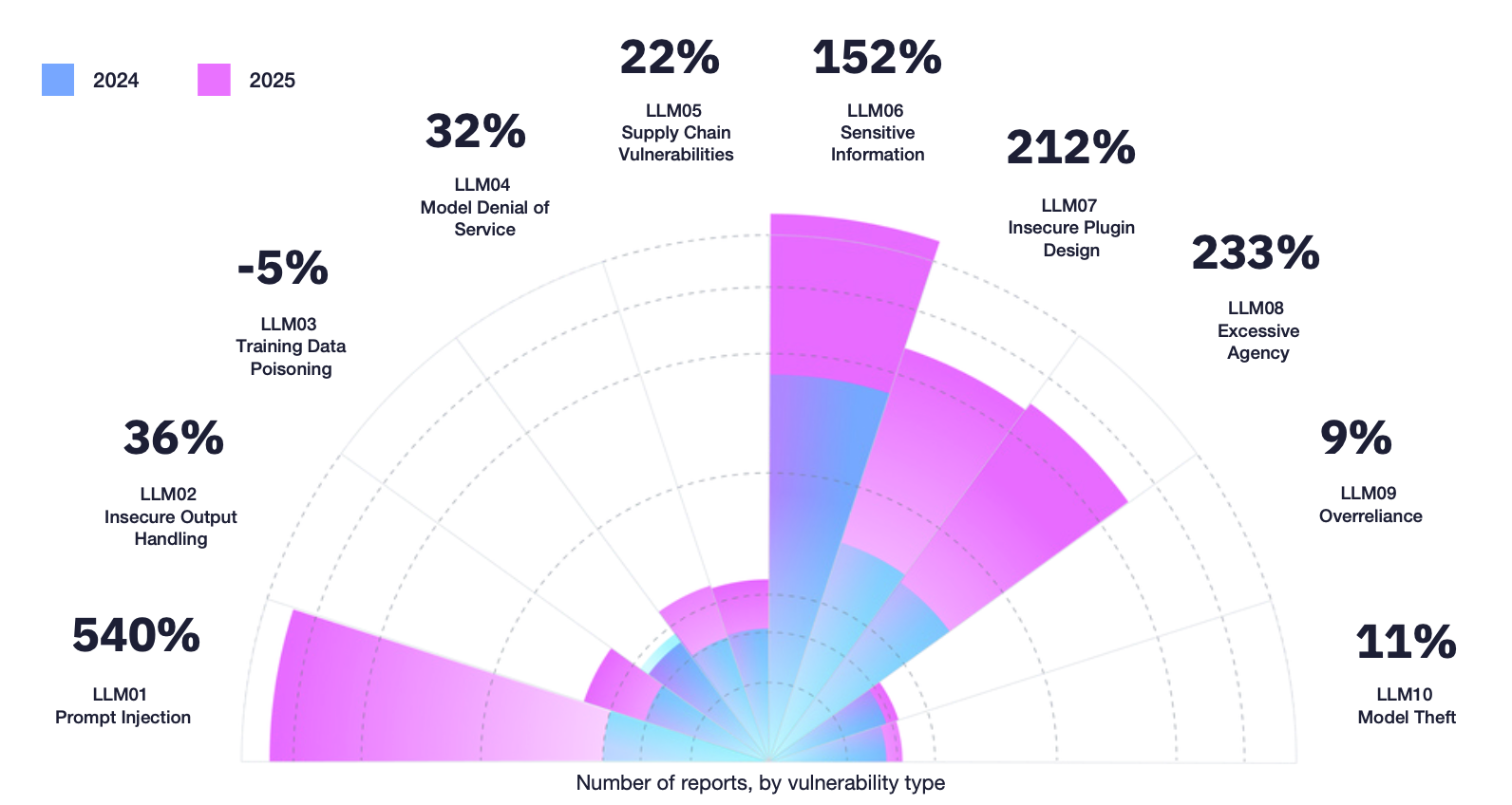

Our latest research revealed that valid AI-related security reports more than tripled since 2024.*

Organizations deploying AI at scale are learning a hard truth: AI expands opportunity as well as exposure. And the moment your AI systems begin influencing decisions or interacting with users, your approach to risk must mature.

That’s where AI security testing becomes the turning point.

How AI Is Reshaping the Exposure Landscape

AI systems introduce security failures that do not follow fixed execution paths. As models interpret prompts, retrieve data, and invoke tools, attackers are exploiting behavioral and orchestration weaknesses that traditional application testing was never designed to detect. This shift is increasingly reflected in the types of security reports researchers submit.

The AI vulnerability data from the most recent Hacker-Powered Security Report shows rapid growth in prompt injection, insecure plugin and tool design, excessive model agency, and exposure of sensitive information. These issues emerge from how AI systems reason and act, rather than from classic client-side or request-based flaws.

Organizations in the early stages of AI adoption often see limited impact, as deployments are small or constrained. But as AI systems gain autonomy, access internal data, or interact with users at scale, these AI-specific risks become operational and materially change the exposure landscape.

“What we’re seeing in the data is already playing out in the real world. Recent AI security incidents, including the Claude jailbreaks and Shai-Hulud 2.0, exposed failures in prompt control, tool boundaries, and model agency. These same weakness patterns now dominate valid AI security reports, reinforcing why security for AI has become a maturity requirement as organizations scale AI into real user and decision-making workflows.”

—Naz Bozdemir, Lead Product Researcher at HackerOne

The Gap Between Early AI Adopters and AI-Mature Enterprises

Organizations at earlier stages of AI adoption tend to concentrate on limited deployments, such as pilots, internal tools, or narrowly scoped features. These implementations often rely on existing application security controls, as AI components are introduced incrementally and operate within constrained environments.

More AI-mature enterprises operate at a different scale. They have multiple models or agents in production, expose AI functionality to external users, or integrate AI systems directly into core SaaS workflows. At this stage, AI behavior, data access, and tool interaction become material to overall system risk, and security considerations shift accordingly.

As organizations’ AI implementations become more mature and more widely deployed, the risks surface with greater frequency, visibility, and impact. Teams begin to encounter:

- Unexpected unsafe outputs

- Prompt injection attempts

- Sensitive information disclosure

- RAG manipulation and data poisoning

- High-impact failures that traditional QA was never designed to predict

When these risks surface in live environments, leaders recognize they need more than policy, training, or basic guardrails. They need validation.

AI Security Testing Is the Signal of AI Maturity

As organizations introduce more AI workloads into production, risks shift from theoretical to operational. Matching the level of AI in use with proven security testing levels forms a stable foundation of AI trust, where both AI security and safety are considered and secured.

Gartner reports that AI security testing will be constrained to organizations moving into production with a greater number of AI workloads rather than those that are experimenting and in early stages of deployment. But the rate of adoption will quickly increase.

“The growth rate for AI security testing is fast because of the significant potential reputational, regulatory and other damage that can occur from AI models, applications and agents. Organizations will require assurances that use of generative AI, particularly for externally facing automated chatbots and other AI-enabled services, will respond predictably and not create security and privacy exposures.”

—Gartner® Emerging Tech Impact Radar: AI Cybersecurity Ecosystem

Mature organizations adopt AI security testing because they can no longer guess. They need proof. This is when organizations can align their AI use with the appropriate AI security level:

Hardened and continuous AI security testing becomes the milestone where teams move from "building fast" to "building responsibly." It’s a sign they’re ready to scale, ready to harden their systems, and ready to treat AI with the same seriousness as any critical technology.

What “Good” Looks Like for Mature AI Security Programs

Organizations reaching these mature stages of AI security tend to introduce a layered approach that includes:

- Standing AI assurance programs that combine threat modeling, continuous testing (including model-level), and synthetic adversarial traffic to surface real failure modes.

- Regression and policy evidence suites are maintained as versioned defensibility artifacts, mapping controls, tests, and outcomes over time.

- Change management with guardrails such as canarying, staged rollouts, and auto-rollback for prompts, models, and tools.

- Executive-ready reporting with trend dashboards and KPIs or SLOs that track AI safety, security, and reliability.

Rather than relying on assumptions, these mature AI security methods treat AI behavior as an attack surface that requires ongoing testing, measurement, and refinement.

The New Baseline for Responsible AI Security

As AI becomes embedded in every business function, security expectations will rise. Regulations will demand independent validation, boards will expect evidence-based assurance, and customers will gravitate toward organizations that demonstrate innovation and safety.

AI security testing is becoming the maturity milestone that separates organizations experimenting with AI from those ready to operationalize it responsibly.

Download the AI Security Maturity & Readiness Checklist

*Hacker-Powered Security Report 2025: The Rise of the Bionic Hacker

Survey methodology: HackerOne and UserEvidence surveyed 99 HackerOne customer representatives between June and August 2025. Respondents represented organizations across industries and maturity levels, including 6% from Fortune 500 companies, 43% from large enterprises, and 31% in executive or senior management roles. In parallel, HackerOne conducted a researcher survey of 1,825 active HackerOne researchers, fielded between July and August 2025. Findings were supplemented with HackerOne platform data from July 1, 2024 to June 30, 2025, covering all active customer programs. Payload analysis: HackerOne also analyzed over 45,000 payload signatures from 23,579 redacted vulnerability reports submitted during the same period.

Gartner, Emerging Tech Impact Radar: AI Cybersecurity Ecosystem, Mark Wah, David Senf, Alfredo Ramirez IV, Tarun Rohilla, 17 October 2025

Gartner is a trademark of Gartner, Inc. and/or its affiliates. Gartner does not endorse any company, vendor, product or service depicted in its publications, and does not advise technology users to select only those vendors with the highest ratings or other designation. Gartner publications consist of the opinions of Gartner’s business and technology insights organization and should not be construed as statements of fact. Gartner disclaims all warranties, expressed or implied, with respect to this publication, including any warranties of merchantability or fitness for a particular purpose.