A Guide To Subdomain Takeovers

HackerOne's Hacktivity feed, a curated feed of publicly disclosed reports, has seen its fair share of subdomain takeover reports. Since Detectify's fantastic series on subdomain takeovers, the bug bounty industry has seen a rapid influx of reports concerning this type of issue. The basic premise of a subdomain takeover is a host that points to a third-party service that is not currently in use; an adversary can set up an account on that service, claim the host, and serve content on the vulnerable subdomain. As a hacker and a security analyst, I deal with this type of issue on a daily basis. My goal today is to create an overall guide to understanding, finding, exploiting, and reporting subdomain misconfigurations. This article assumes that the reader has a basic understanding of the Domain Name System (DNS) and knows how to set up a subdomain.

Introduction to subdomain takeovers

If you have never performed a subdomain takeover before or would like a fresh introduction, I have devised an example scenario to help explain the basics. For this scenario, let us assume that example.com is the target and that the team running example.com has a bug bounty programme. While enumerating all of the subdomains belonging to example.com (a process that we will explore later), a hacker stumbles across subdomain.example.com, a subdomain pointing to GitHub Pages. We can determine this by reviewing the subdomain's DNS records; in this example, subdomain.example.com has multiple A records pointing to GitHub's dedicated IP addresses for custom pages.

$ host subdomain.example.com

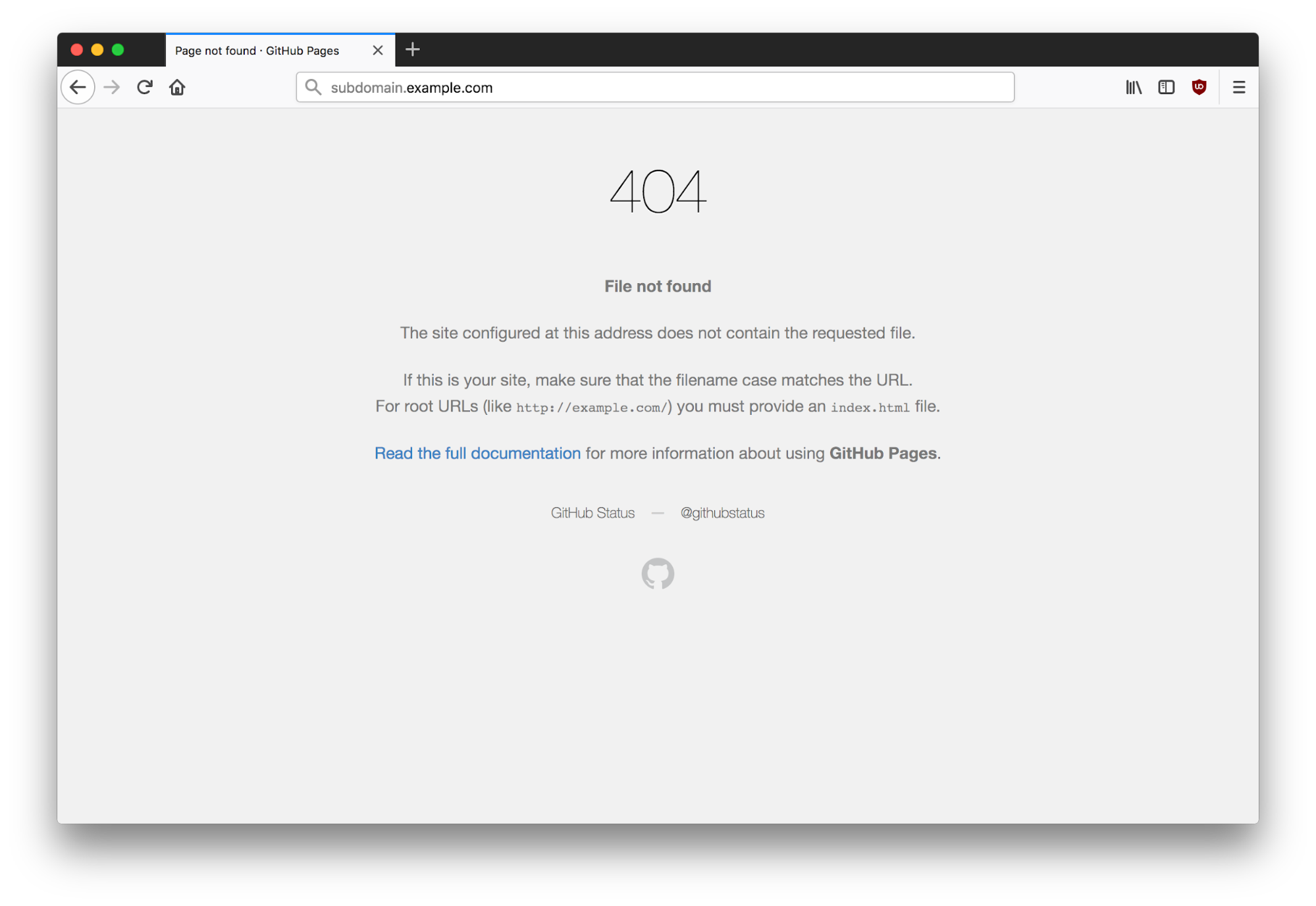
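The same check can be scripted. The sketch below is a minimal example, assuming the four A-record addresses that GitHub documents for Pages apex domains at the time of writing; GitHub has changed these addresses over time, so treat the set as an assumption and verify it against GitHub's own documentation. The function names are hypothetical.

```python
import socket

# Assumption: the A-record addresses GitHub documents for Pages custom
# domains at the time of writing. Verify before relying on this set.
GITHUB_PAGES_IPS = frozenset({
    "185.199.108.153",
    "185.199.109.153",
    "185.199.110.153",
    "185.199.111.153",
})


def resolve_a_records(hostname):
    """Return the IPv4 addresses for hostname, or [] if it does not resolve."""
    try:
        _name, _aliases, addresses = socket.gethostbyname_ex(hostname)
    except OSError:  # covers socket.gaierror and socket.herror
        return []
    return addresses


def points_to_github_pages(addresses):
    """True if any of the given addresses is a known GitHub Pages address."""
    return any(address in GITHUB_PAGES_IPS for address in addresses)
```

For example, `points_to_github_pages(resolve_a_records("subdomain.example.com"))` would flag the host from the scenario for a closer look. A match only says where the host points, not that it can be claimed.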

When navigating to subdomain.example.com, we discover the following 404 error page.

Most hackers' senses start tingling at this point. This 404 page indicates that no content is being served under the top-level directory and that we should attempt to add this subdomain to our personal GitHub repository. Please note that this does not indicate that a takeover is possible on all applications. Some application types require you to check both HTTP and HTTPS responses for takeovers and others may not be vulnerable at all.

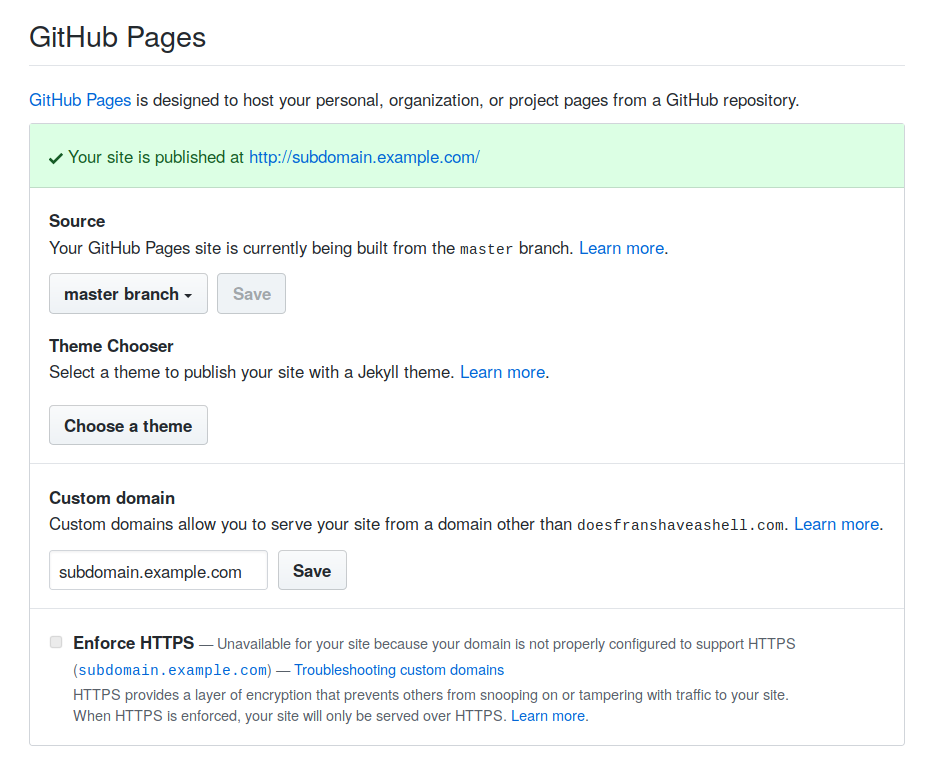

Once the custom subdomain has been added to our GitHub project, we can see that the contents of the repository are served on subdomain.example.com — we have successfully claimed the subdomain. For demonstration purposes, the index page now displays a picture of a frog.

Second-order subdomain takeovers

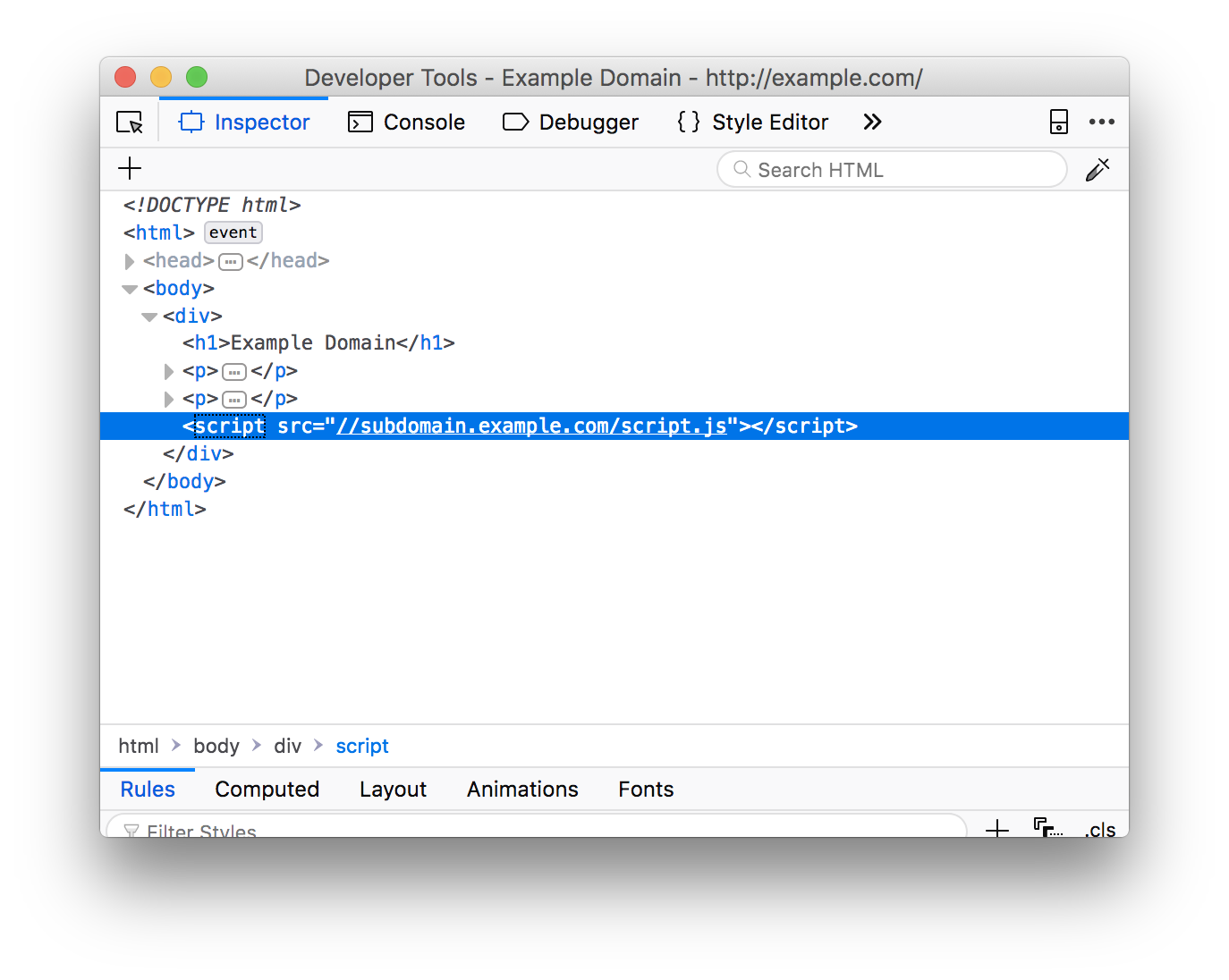

Second-order subdomain takeovers, which I like to refer to as "broken link hijacking", involve vulnerable subdomains that do not necessarily belong to the target but are used to serve content on the target's website. This means that a resource is being imported on the target page, for example via a blob of JavaScript, and the hacker can claim the subdomain from which the resource is being imported. Hijacking a host that is used somewhere on the page can ultimately lead to stored cross-site scripting, since the adversary can load arbitrary client-side code on the target page. The reason I wanted to list this issue in this guide is to highlight the fact that, as a hacker, I do not want to restrict myself to subdomains on the target host. You can easily expand your scope by inspecting source code and mapping out all the hosts that the target relies on.

This is also the reason why, if you manage to hijack a subdomain, it is worth investing time to see if any pages import assets from your subdomain.
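Mapping out the hosts a page relies on is easy to automate. The following is a rough sketch, using only the Python standard library, that collects every host referenced in `src` and `href` attributes and keeps those outside the target's own domain. The class and function names are hypothetical, and a real crawl would also need to look inside JavaScript and CSS.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse


class AssetHostParser(HTMLParser):
    """Collects the hostnames found in src and href attributes."""

    def __init__(self):
        super().__init__()
        self.hosts = set()

    def handle_starttag(self, tag, attrs):
        for name, value in attrs:
            if name in ("src", "href") and value:
                host = urlparse(value).hostname  # None for relative URLs
                if host:
                    self.hosts.add(host)


def external_hosts(html, target_domain):
    """Return the sorted hosts referenced by html that are not under target_domain."""
    parser = AssetHostParser()
    parser.feed(html)
    return sorted(
        host for host in parser.hosts
        if host != target_domain and not host.endswith("." + target_domain)
    )
```

Each host returned is a candidate for the same takeover checks you would run against the target's own subdomains.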

Subdomain enumeration and discovery

Now that we have a high-level overview of what it takes to serve content on a misconfigured subdomain, the next step is to grasp the huge variety of techniques, tricks, and tools used to find vulnerable subdomains.

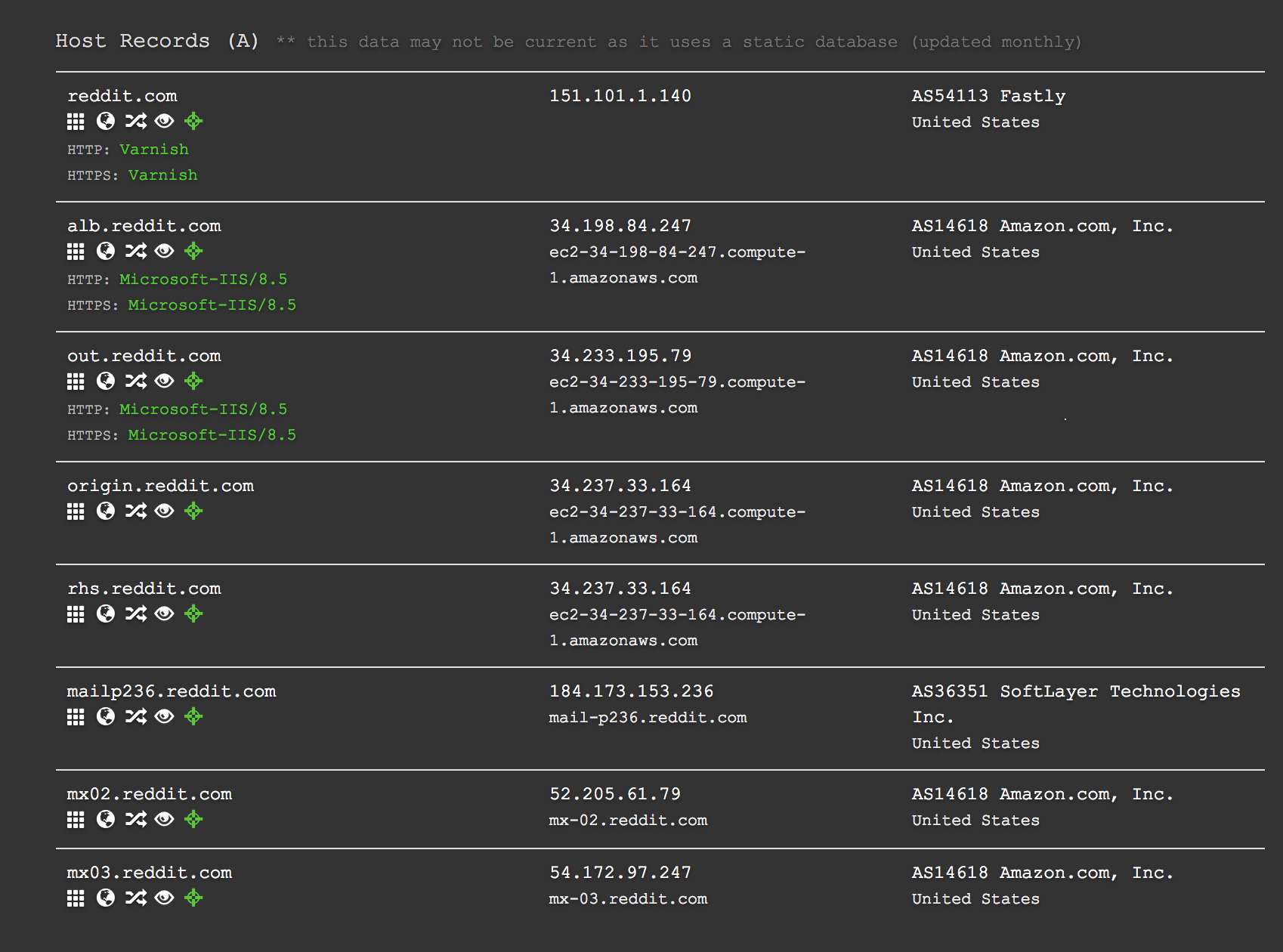

Before diving right in, we must first differentiate between scraping and brute forcing, as both of these processes can help you discover subdomains but can produce different results. Scraping is a passive reconnaissance technique whereby one uses external services and sources to gather subdomains belonging to a specific host. Some services, such as DNS Dumpster and VirusTotal, index subdomains that have been crawled in the past, allowing you to collect and sort the results quickly without much effort.

Results for subdomains belonging to reddit.com on DNS Dumpster.

Scraping does not only consist of using indexing pages; remember to check the target's Git repositories, Content Security Policy headers, source code, issue trackers, etc. The list of sources is endless, and I constantly discover new methods for increasing my results. Often you will find that the more peculiar your technique is, the more likely you are to find something that nobody else has come across, so be creative and test your ideas in practice against vulnerability disclosure programmes.

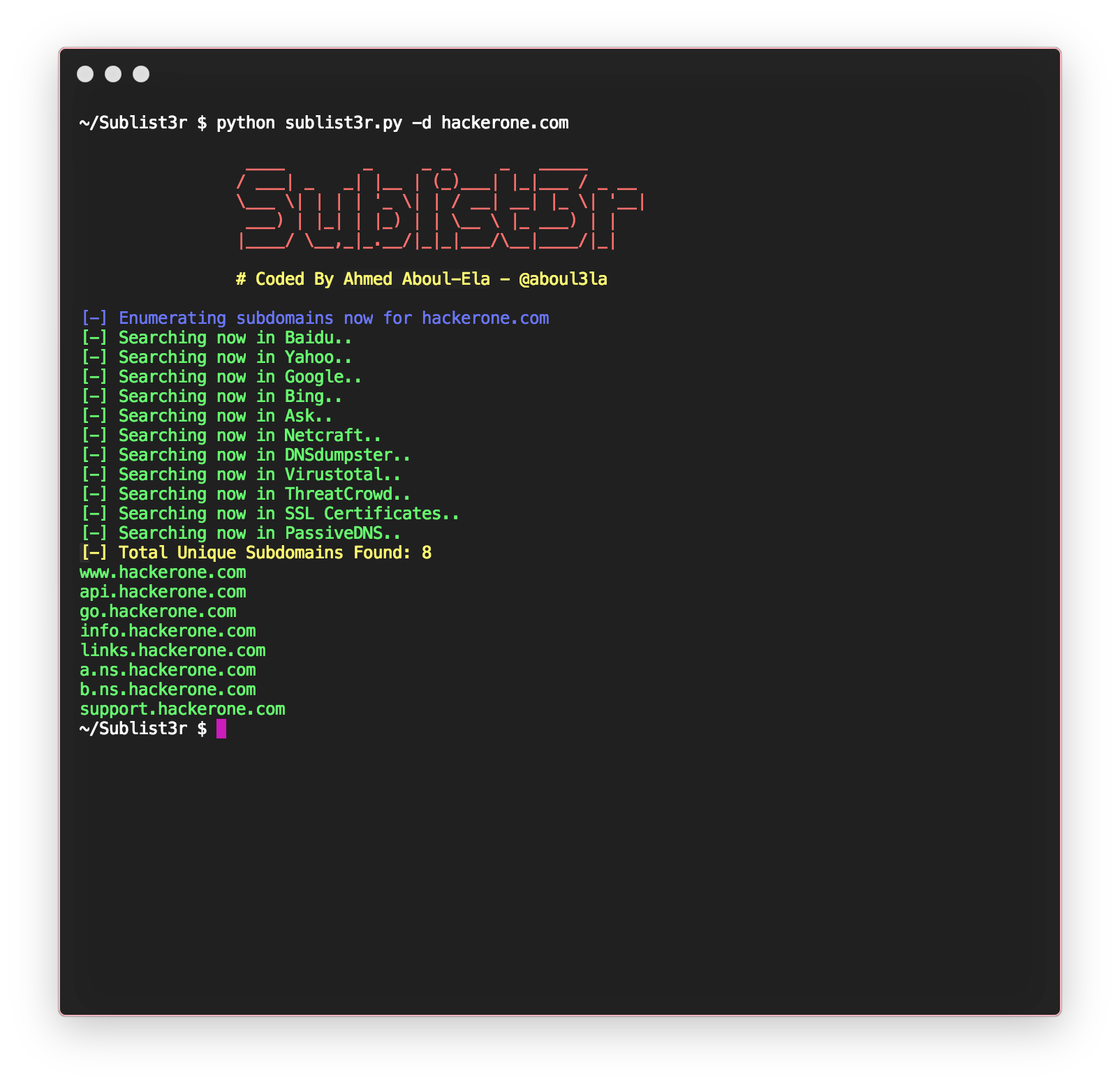

Sublist3r by Ahmed Aboul-Ela is arguably the simplest subdomain scraping tool that comes to mind. This light-weight Python script gathers subdomains from numerous search engines, SSL certificates, and websites such as DNS Dumpster. The set-up process on my personal machine was as straightforward as:

$ git clone https://github.com/aboul3la/Sublist3r.git

When brute forcing subdomains, the hacker iterates through a wordlist and, based on the response, determines whether or not the host is valid. Please note that it is very important to always check if the target has a wildcard enabled; otherwise you will end up with a lot of false positives. A wildcard simply means that every subdomain returns a response, which skews your results. You can easily detect wildcards by requesting a seemingly random hostname that the target most probably has not set up.

$ host randomifje8z193hf8jafvh7g4q79gh274.example.com
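The wildcard check can be sketched in a few lines of Python. This is a minimal example under stated assumptions: the function names are hypothetical, several random probes are used instead of one to reduce the chance of a fluke, and the resolver is passed in as a parameter so the logic can be exercised without touching the network.

```python
import secrets
import socket

ALPHABET = "abcdefghijklmnopqrstuvwxyz0123456789"


def random_label(length=32):
    """Return a random DNS label that the target almost certainly never set up."""
    return "".join(secrets.choice(ALPHABET) for _ in range(length))


def resolves(hostname):
    """True if the system resolver returns an address for hostname."""
    try:
        socket.gethostbyname(hostname)
        return True
    except OSError:
        return False


def has_wildcard(domain, resolver=resolves, probes=3):
    """True if every randomly generated subdomain of domain resolves."""
    return all(resolver(f"{random_label()}.{domain}") for _ in range(probes))
```

If `has_wildcard("example.com")` returns true, brute-force hits need a second filter, for instance comparing each answer against the address the random names resolve to.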

For the best results while brute forcing subdomains, I suggest creating your own personal wordlist with terms that you have come across in the past or that are commonly linked to services that you are interested in. For example, I often find myself looking for hosts containing the keywords "jira" and "git" because I sometimes find vulnerable Atlassian Jira and Git instances.

If you are planning on brute forcing subdomains, I highly recommend taking a look at Jason Haddix's word list. Jason went to all the trouble of merging lists from subdomain discovery tools into one extensive list.

Fingerprinting

To increase your results when it comes to finding subdomains, no matter if you are scraping or brute forcing, you can use a technique called fingerprinting. Fingerprinting allows you to create a custom word list for your target and can reveal assets belonging to the target that you would not find using a generic word list.
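One simple way to build such a target-specific word list, offered here as an assumption about how fingerprinting might be approached rather than as a prescribed method, is to break the subdomains you have already found into their component terms and rank them by frequency. The function name is hypothetical.

```python
import re
from collections import Counter


def wordlist_from_hosts(hosts, domain):
    """Split known subdomains of domain into terms, most frequent first."""
    counts = Counter()
    suffix = "." + domain
    for host in hosts:
        host = host.strip().lower().rstrip(".")
        if not host.endswith(suffix):
            continue  # ignore hosts outside the target domain
        prefix = host[: -len(suffix)]
        # Treat dots, hyphens and underscores as term separators.
        for token in re.split(r"[._-]", prefix):
            if token:
                counts[token] += 1
    return [word for word, _count in counts.most_common()]
```

The resulting terms reflect the target's own naming habits and can be fed into a brute-force or permutation tool.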

Notable tools

There is a wide variety of tools out there for subdomain takeovers. This section contains some notable ones that have not been mentioned so far.

Altdns

In order to recursively brute force subdomains, take a look at Shubham Shah's Altdns script. Running your custom word list after fingerprinting a target through Altdns can be extremely rewarding. I like to use Altdns to generate word lists to then run through other tools.
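To illustrate the general idea of permutation-based word lists, here is a small sketch that combines known labels with extra words. It is not Altdns's actual algorithm, only an assumed simplification; the function name and the set of joining patterns are hypothetical.

```python
def alterations(known_labels, words):
    """Combine each known label with each word using common joining patterns."""
    candidates = set()
    for label in known_labels:
        for word in words:
            candidates.update({
                f"{word}{label}",
                f"{label}{word}",
                f"{word}-{label}",
                f"{label}-{word}",
                f"{word}.{label}",
                f"{label}.{word}",
            })
    return sorted(candidates)
```

For instance, `alterations(["api"], ["dev"])` yields candidates such as `dev-api` and `api.dev`, each of which would then be resolved under the target domain.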

Commonspeak

Yet another tool by Shubham, Commonspeak is a tool to generate word lists using Google's BigQuery. The goal is to generate word lists that reflect current trends, which is particularly important in a day and age where technology is rapidly evolving. It is worth reading https://pentester.io/commonspeak-bigquery-wordlists/ if you want to get a better understanding of how this tool works and where it gathers keywords from.

SubFinder

A tool that combines both scraping and brute forcing beautifully is SubFinder. I have found myself using SubFinder more than Sublist3r now as my general-purpose subdomain discovery tool. In order to get better results, make sure to include API keys for the various services that SubFinder scrapes to find subdomains.

Massdns

Massdns is a blazing fast subdomain enumeration tool. What would take a quarter of an hour with some tools, Massdns can complete in a minute. Please note, if you are planning on running Massdns, make sure you provide it with a list of valid resolvers. Take a look at https://public-dns.info/nameservers.txt, play around with the resolvers, and see which ones return the best results. If you do not update your list of resolvers, you will end up with a lot of false positives.

$ ./scripts/subbrute.py lists/names.txt example.com | ./bin/massdns -r lists/resolvers.txt -t A -o S -w results.txt

Automating your workflow

When hunting for subdomain takeovers, automation is key. The top bug bounty hunters constantly monitor targets for changes and continuously have an eye on every single subdomain that they can find. For this guide, I do not believe it is necessary to focus on monitoring setups. Instead, I want to stick to simple tricks that can save you time and can be easily automated.

The first task that I love automating is filtering out live subdomains from a list of hosts. When scraping for subdomains, some results will be outdated and no longer reachable; therefore, we need to determine which hosts are live. Please keep in mind, as we will see later, that just because a host does not resolve does not necessarily mean it cannot be hijacked. This task can easily be accomplished by using the host command: a subdomain that is no longer live will return an error.

while read subdomain; do
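The same filtering step can be sketched in Python. This is a minimal example, assuming that "live" simply means the name resolves through the system resolver; the function names are hypothetical, and the check is passed in as a parameter so that it can be swapped for something stricter, such as an HTTP request.

```python
import socket
from concurrent.futures import ThreadPoolExecutor


def is_live(hostname):
    """True if hostname resolves to at least one address."""
    try:
        socket.getaddrinfo(hostname, None)
        return True
    except (OSError, UnicodeError):  # resolution failure or malformed name
        return False


def filter_live(hosts, check=is_live, workers=20):
    """Return the hosts for which check() is true, preserving input order."""
    cleaned = [host.strip() for host in hosts if host.strip()]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = list(pool.map(check, cleaned))
    return [host for host, ok in zip(cleaned, results) if ok]
```

Reading a file of scraped hosts and writing the result of `filter_live` to `live.txt` gives the list used in the following steps. Hosts that fail this check are still worth keeping in a separate file, since a name that does not resolve is not necessarily safe from hijacking.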

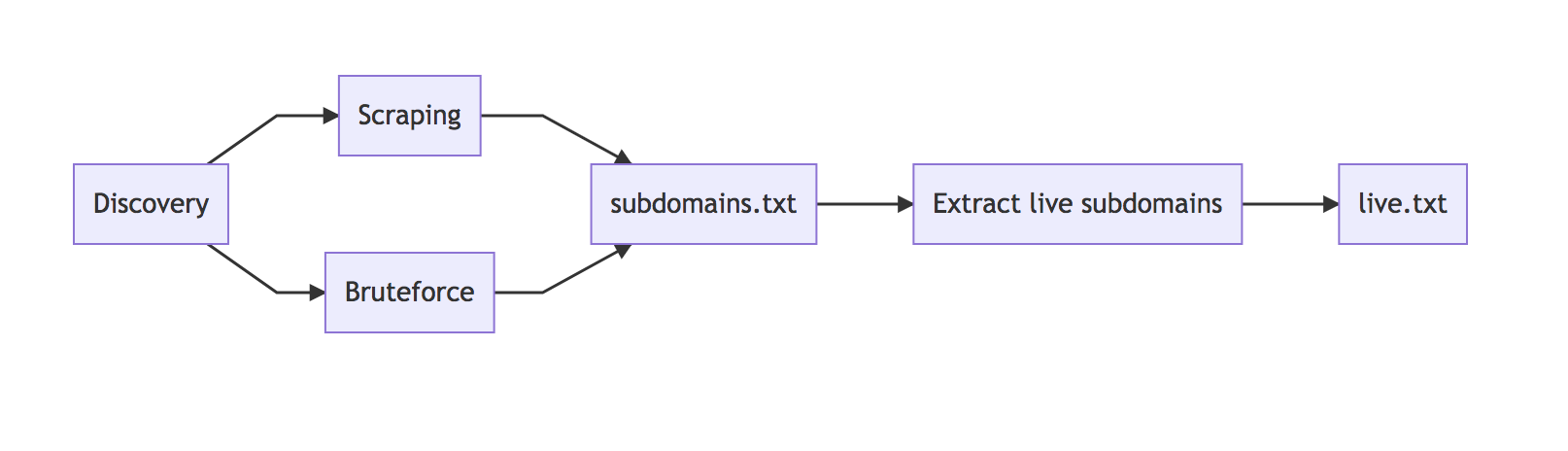

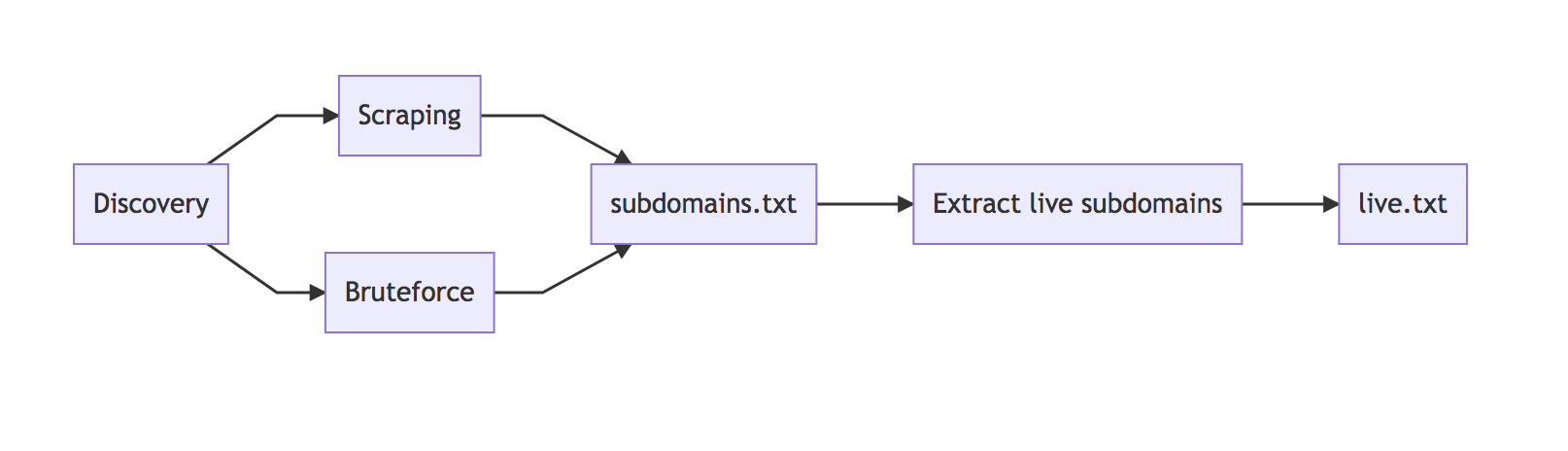

So if we put everything that we have so far together, we end up with the following workflow.

The next step is to get an overview of the various subdomains. There are two options: The first is to run a screenshot script across all subdomains; the second one requires storing the contents of the page in a text file.

For taking screenshots, my go-to tool is currently EyeWitness. This tool generates an HTML document containing all the screenshots, response bodies, and headers from your list of hosts.

$ ./EyeWitness -f live.txt -d out --headless

EyeWitness can be a little too heavy for some cases and you might only want to store the page's contents via a simple GET request to the top-level directory of the subdomain. For cases like these, I use Tom Hudson's meg. meg sends requests concurrently and then stores the output in plain-text files. This makes it a very efficient and lightweight solution for sieving through your subdomains and allows you to grep for keywords.

$ meg -d 10 -c 200 / live.txt
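Once the responses are stored as plain text, searching them for tell-tale error messages is straightforward. The sketch below is illustrative only: the fingerprint table is a tiny, assumed sample (the GitHub Pages message matches the 404 page discussed earlier), the function names are hypothetical, and the directory to scan is whatever location your tool wrote its output to.

```python
from pathlib import Path

# Illustrative sample only; real fingerprint lists are much longer and
# the messages can change, so verify each entry before relying on it.
FINGERPRINTS = {
    "GitHub Pages": "There isn't a GitHub Pages site here.",
    "Amazon S3": "NoSuchBucket",
}


def match_fingerprints(body):
    """Return the services whose error message appears in body."""
    return [service for service, needle in FINGERPRINTS.items() if needle in body]


def scan_directory(root):
    """Map each saved response file under root to the services it matches."""
    hits = {}
    for path in Path(root).rglob("*"):
        if path.is_file():
            services = match_fingerprints(path.read_text(errors="replace"))
            if services:
                hits[str(path)] = services
    return hits
```

A match is only a lead; as noted earlier, a 404 page on its own does not prove that a takeover is possible.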

Special cases

There is a special case that we need to look out for, one that Frans Rosén highlighted in his talk "DNS hijacking using cloud providers – No verification needed". Whenever you encounter dead DNS records, do not just assume that you cannot hijack that subdomain. As Frans points out, the host command might return an error, but running dig will unveil the dead records.

Exploitation

Right, now you control a subdomain belonging to the target, what can you do next? When determining plausible attack scenarios with a misconfigured subdomain, it is crucial to understand how the subdomain interacts with the base name and the target's core service.

Cookies

subdomain.example.com can modify cookies scoped to example.com. This is important to remember as this could potentially allow you to hijack a victim's session on the base name.

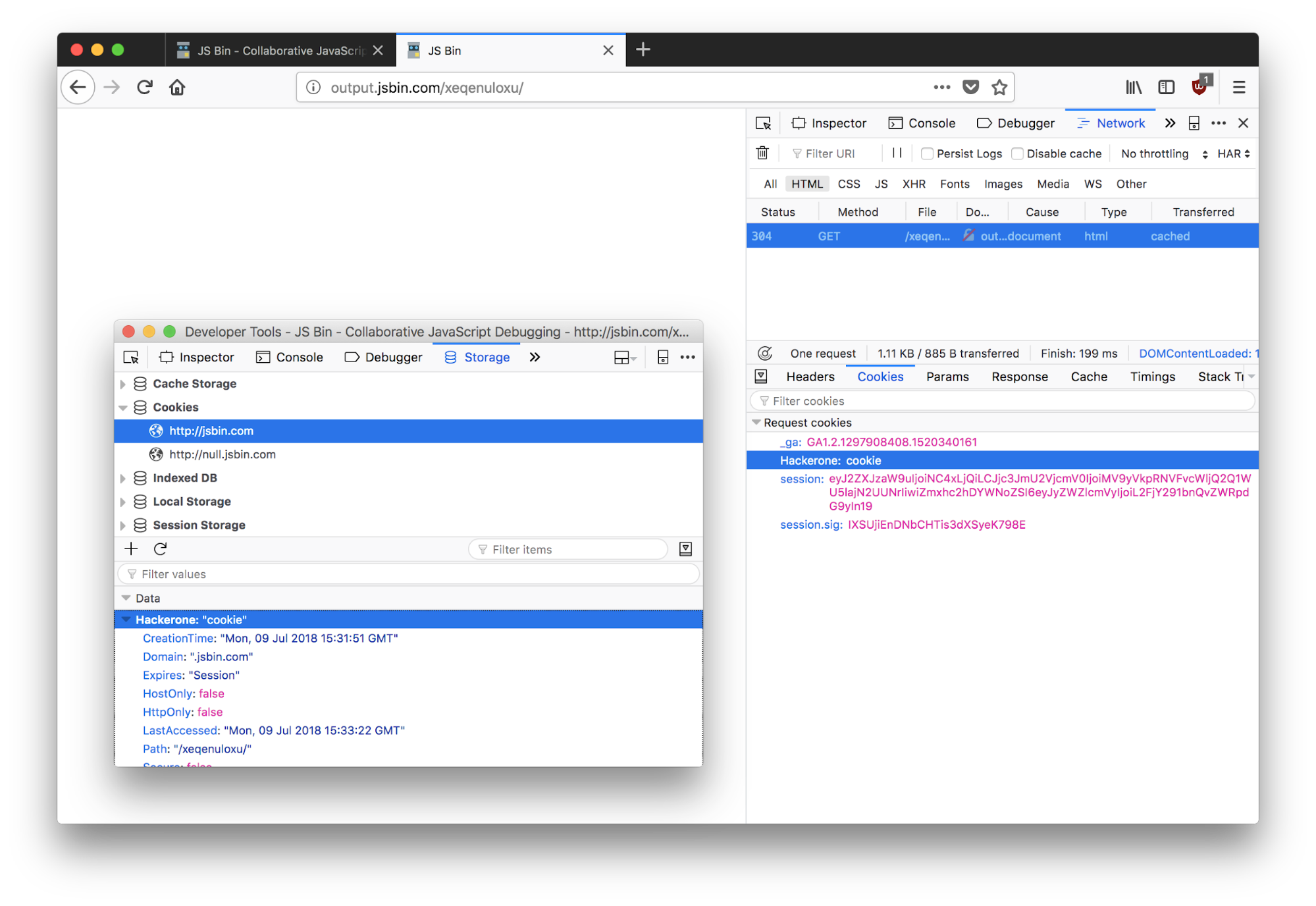

From output.jsbin.com, we can set cookies for jsbin.com.
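The rule behind this behaviour can be approximated with a simple domain-match check. This is a simplified sketch of the idea, not a full implementation of browser cookie handling: it ignores the public suffix list and other restrictions that browsers apply, and the function name is hypothetical.

```python
def can_set_cookie_for(request_host, cookie_domain):
    """True if request_host is cookie_domain itself or one of its subdomains."""
    host = request_host.lower().rstrip(".")
    domain = cookie_domain.lower().lstrip(".")
    return host == domain or host.endswith("." + domain)
```

Under this rule `can_set_cookie_for("output.jsbin.com", "jsbin.com")` holds, which is why a hijacked subdomain is able to write cookies that the base name will later receive.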

If the base name is vulnerable to session fixation and uses HttpOnly cookies, you can set a cookie; then, when the user restarts their browser, your malicious cookie will take precedence over the newly generated cookie because cookies are sorted by age.

Cross-Origin Resource Sharing

Cross-Origin Resource Sharing (CORS) is a technology that allows a host to share the contents of a page cross-origin. Applications create a scope with a set of rules that permits hosts to extract data, including authenticated data. Some applications permit subdomains to make cross-origin HTTP requests with the assumption that subdomains are trusted entities. When you hijack a subdomain, look for CORS headers (Burp Suite Pro's scanner usually picks them up) and see if the application whitelists subdomains. This could allow you to steal data from an authenticated user on the main application.
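A quick way to test for this is to send a request with the hijacked subdomain in the `Origin` header and inspect the response headers. The helper below is a small sketch of that inspection step; the function name is hypothetical and the headers are assumed to be available as a plain dictionary.

```python
def cors_allows_credentialed_read(response_headers, origin):
    """True if the response lets origin read it with the user's credentials."""
    headers = {name.lower(): value.strip() for name, value in response_headers.items()}
    allowed_origin = headers.get("access-control-allow-origin")
    allows_credentials = headers.get("access-control-allow-credentials") == "true"
    # A wildcard origin cannot be combined with credentials, so only an
    # exact echo of our origin counts here.
    return allowed_origin == origin and allows_credentials
```

If this returns true for `https://subdomain.example.com`, script served from the hijacked subdomain could read authenticated responses from the main application.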

OAuth whitelisting

Similar to Cross-Origin Resource Sharing, the OAuth flow also has a whitelisting mechanism, whereby developers can specify which callback URIs should be accepted. The danger here, once again, is when subdomains have been whitelisted: you can then redirect users during the OAuth flow to your subdomain, potentially leaking their OAuth token.

Content Security Policy

The Content Security Policy (CSP) is yet another list of hosts that an application trusts, but the goal here is to restrict which hosts can execute client-side code in the context of the application. This header is particularly useful if one wants to minimise the impact of cross-site scripting. If your subdomain is included in the whitelist, you can use your subdomain to bypass the policy and execute malicious client-side code on the application.

$ curl -sI https://hackerone.com | grep -i "content-security-policy"
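Once you have the header value, a small parser helps to check whether a hijacked host is covered by the policy. This is a simplified sketch with hypothetical function names: it handles exact hosts and `*.` wildcards only, and it assumes a fallback to `default-src`, which applies to fetch directives such as `script-src` but not to every directive.

```python
def parse_csp(header_value):
    """Parse a CSP header value into {directive: [source, ...]}."""
    policy = {}
    for directive in header_value.split(";"):
        parts = directive.split()
        if parts:
            # Only the first occurrence of a directive is kept.
            policy.setdefault(parts[0].lower(), parts[1:])
    return policy


def host_allowed(policy, directive, host):
    """True if host matches a host source listed for directive."""
    sources = policy.get(directive, policy.get("default-src", []))
    for source in sources:
        if source.startswith("'"):
            continue  # keywords such as 'self' or 'unsafe-inline'
        # Strip scheme, path and port to leave the host part.
        candidate = source.split("://", 1)[-1].split("/", 1)[0].split(":", 1)[0].lower()
        if candidate == host:
            return True
        if candidate.startswith("*.") and host.endswith(candidate[1:]):
            return True
    return False
```

If `host_allowed(policy, "script-src", "subdomain.example.com")` is true, scripts hosted on the hijacked subdomain would be permitted to run on pages that send this policy.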

Clickjacking

As described in the "Cure53 Browser Security White Paper", Internet Explorer, Edge, and Safari support the ALLOW-FROM directive in the X-Frame-Options header, which means if your subdomain is whitelisted, you can frame the target page and therefore perform clickjacking attacks.

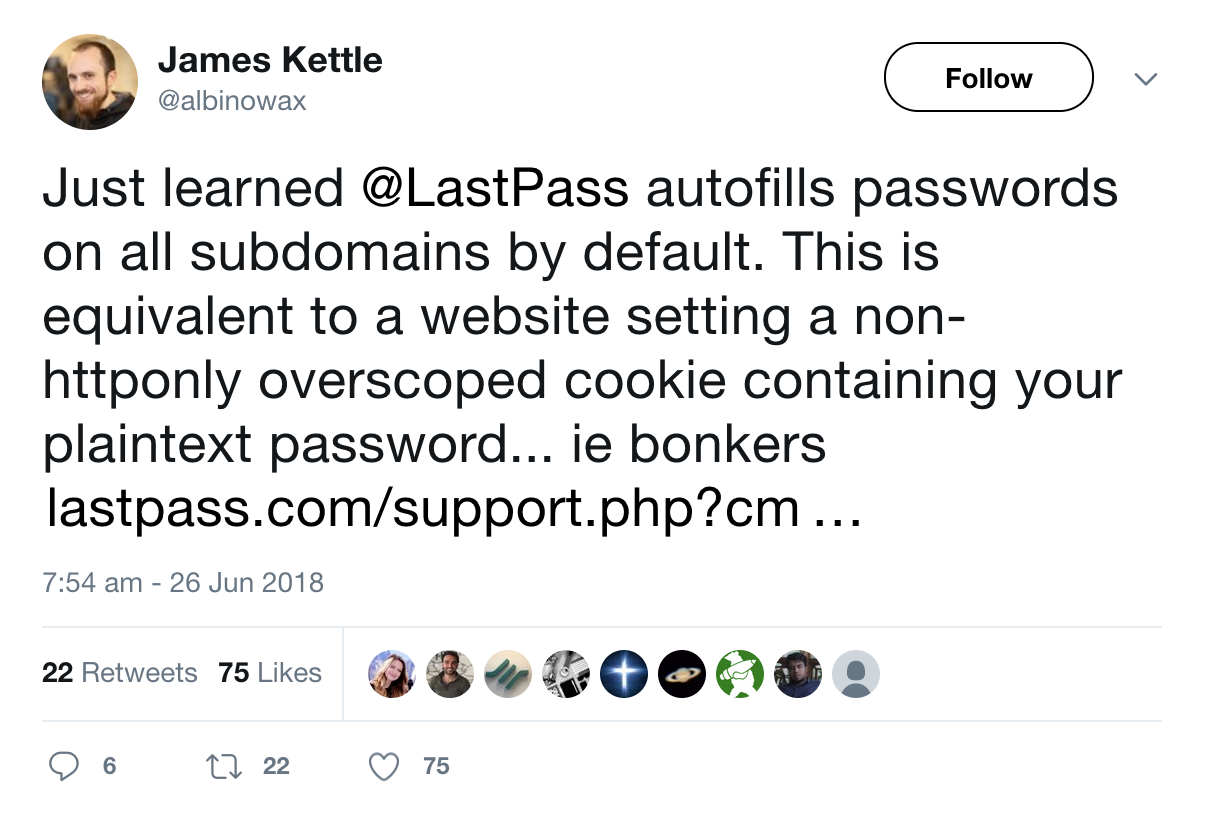

Password managers

This is not necessarily one that you would include in a report, but it is worth noting that some password managers will automatically fill out login forms on subdomains belonging to the main application.

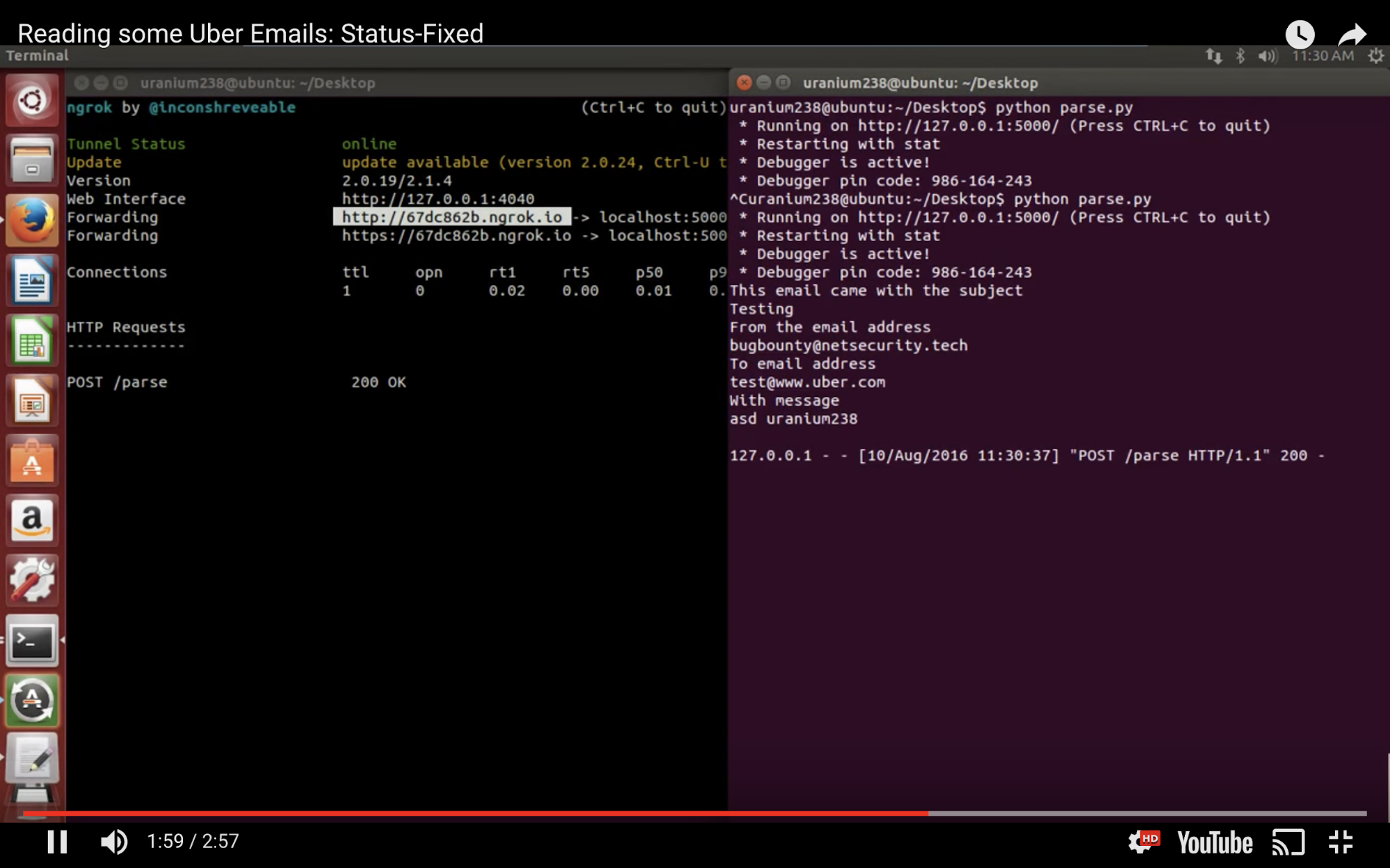

Intercepting emails

Rojan Rijal demonstrated how he was able to intercept emails by claiming a subdomain belonging to uber.com on SendGrid.

Reporting subdomain takeovers

Before you even attempt to report a subdomain takeover, make sure that you are in fact able to serve content on the subdomain. However, whatever you do, do not publish anything on the index page, even if it is a harmless picture of a frog as demonstrated earlier. It is best practice to serve an HTML file on a hidden path containing a secret message in an HTML comment. That should be enough to demonstrate the issue when initially contacting the programme about your finding. Only once the team has given you permission, should you attempt to escalate the issue and actually demonstrate the overall impact of the vulnerability. In most cases though, the team should already be aware of the impact and your report should contain information concerning the exploitability of subdomain takeovers.
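A proof-of-concept file of this kind can be generated in a couple of lines. The sketch below is only one possible approach: the function name, the file naming scheme, and the wording of the comment are all hypothetical.

```python
import secrets


def build_poc(reporter):
    """Return (path, body, token) for a proof-of-concept page on a hidden path."""
    token = secrets.token_hex(16)             # secret to quote in the report
    path = f"{secrets.token_hex(8)}.html"     # unguessable file name
    body = f"<!-- subdomain takeover PoC by {reporter}: {token} -->\n"
    return path, body, token
```

Publishing `body` at `path` on the claimed subdomain and quoting the token in the report lets the programme verify the finding without anything being visible on the index page.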

Take your time when writing up a report about a subdomain takeover as this type of issue can be extremely rewarding and nobody can beat you to the report since you are — hopefully — the only one that has control over the subdomain.

Author's note: I have only ever witnessed one duplicate report for a subdomain takeover, so while there is still the possibility, the chances of this ever happening to you are fairly slim.

We have reached the end of this guide and I look forward to triaging your subdomain takeover reports on HackerOne. Remember to practice and apply the tricks listed in this write-up when hunting for subdomain takeovers.

On a final note, I would like to thank Frans Rosén, Filedescriptor, Mongo, and Tom Hudson for exchanging ideas concerning subdomain takeovers. Their research has been the foundation for a lot of what I have discovered throughout my journey.